Based on the artwork of Leonardo di ser Piero da Vinci, I propose an idea that could take NASA to another level of comprehension within the compass of light! As the saying goes in biblical form, "Go into the light." I take that phrase as the lever and gear for observing life in and of itself, simply by knowing that life forms would do exactly that. I have heard the phrase mostly in connection with the 'near-death experiences' reported in documentaries, where it is often described as very important and life-saving.

Turning this into a form of observation starts with understanding basic cell structure. To approach it in its simplicity is to apply Occam's Razor: the less complicated answer is the one most likely to produce something for the petri dish. As is known, there are comets, meteors, and plausibly moons and/or planets that may be subjects for this interesting project. Right now my country, the United States of America, through V.P. Pence and his support for further NASA funding, can direct more research towards a simpler approach that would involve a beam of light and not the complications of spacecraft.

Aug 21, 2018 - Vice President Mike Pence, with NASA Administrator Jim Bridenstine, will visit NASA's Johnson Space Center in Houston Thursday, Aug. 23, to ..

"...already signed into law historic funding for NASA. And we’ve also fully funded NASA’s most important endeavors from deep-space human exploration" https://www.whitehouse.gov/briefings-statements/remarks-vice-president-pence-administrations-space-policy-priorities-houston-tx/

As for the beam of light, and following the rule of thumb of those who love to say "Go into the light": our ability to direct a beam of light at an object in outer space would produce a stream, and with it the traveling cell structure to study in a vial. Our ability to trap the bacteria/cell on or in that stream of light would come down to the 'probability factor', and for that we currently have Edwin Thompson Jaynes and his treatment of probability theory as an extension of logic (see below). For now I hold this only as an idea; should NASA take on the project, it would go beyond theory, actually beaming a light towards an object and studying the attraction, or the jump, so to speak. Should life in its basic cell structure have the ability to transfer from the dark to the light, this would show intelligence. It would be proof of life even in its most basic form, and it would be exciting stuff should NASA be able to stop the 'seen' in a vial and study the life form in a contained laboratory. This project may be affordable and would not involve anyone putting their life at risk with unfamiliar subject matter, yet at the same time we would be able to understand findings that describe evolution!

This is an idea; please do not get too excited should you be an Average Reader, as there is much study and observation ahead, and more than likely many men and women would first have to recognize the idea before it is even considered a theory. For me, it is what I have in my mind's eye, and I am able to see the concept with ease. I have written it down for memory purposes, on the off chance that there is a day when I may communicate just this one idea in person for better management and distribution, as who really knows when the next comet or meteor may be within the distance of NASA and their 'light source': Basic Physics (?).

I am thinking of a 'laser' as the light stream, since it may provide the 'creek' mechanism and might be capable of the "Hems and Haws" needed to provision the filtering baskets for safety, i.e., traps. With any prospective project as exciting as this one, and with the capability of our laboratories right now, NASA could test the 'strip' to actually see if life (bacteria/cell) could survive on the beam and ensure that a 'Trap' could be made 'at process' for ease of collection or dispensing thereof. (A bit more thought is needed here, as I do not have the computer power to pose these virtuous questions at the speed and compression of answer that I require; i.e., frustration!) This function of observation made literal would be paramount and groundbreaking, as I have yet to read of such dynamics as a possibility, let alone the actual delivery.

I have not read 'Chasing a Beam of Light' on Einstein's thought experiment; however, I will note it here for my own reference as something to study:

Chasing a Beam of Light: Einstein's Most Famous Thought Experiment. ... It shows the untenability of an "emission" theory of light, an approach to electrodynamic theory that Einstein considered seriously and rejected prior to his breakthrough of 1905.

Edwin Thompson Jaynes (July 5, 1922 – April 30,[1] 1998) was the Wayman Crow Distinguished Professor of Physics at Washington University in St. Louis. He wrote extensively on statistical mechanics and on foundations of probability and statistical inference, initiating in 1957 the maximum entropy interpretation of thermodynamics[2][3] as being a particular application of more general Bayesian/information theory techniques (although he argued this was already implicit in the works of Josiah Willard Gibbs). Jaynes strongly promoted the interpretation of probability theory as an extension of logic.

In 1963, together with Fred Cummings, he modeled the evolution of a two-level atom in an electromagnetic field, in a fully quantized way. This model is known as the Jaynes–Cummings model.

A particular focus of his work was the construction of logical principles for assigning prior probability distributions; see the principle of maximum entropy, the principle of transformation groups[4][5] and Laplace's principle of indifference. Other contributions include the mind projection fallacy.

Jaynes' posthumous book, Probability Theory: The Logic of Science (2003) gathers various threads of modern thinking about Bayesian probability and statistical inference, develops the notion of probability theory as extended logic, and contrasts the advantages of Bayesian techniques with the results of other approaches. This book was published posthumously in 2003 (from an incomplete manuscript that was edited by Larry Bretthorst). An unofficial list of errata is hosted by Kevin S. Van Horn. As per Wikipedia at https://en.wikipedia.org/wiki/Edwin_Thompson_Jaynes

Probability theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of these outcomes is called an event.

Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes, which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion.

Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability theory describing such behaviour are the law of large numbers and the central limit theorem.

As a mathematical foundation for statistics, probability theory is essential to many human activities that involve quantitative analysis of data.[1] Methods of probability theory also apply to descriptions of complex systems given only partial knowledge of their state, as in statistical mechanics. A great discovery of twentieth-century physics was the probabilistic nature of physical phenomena at atomic scales, described in quantum mechanics.[2]

The mathematical theory of probability has its roots in attempts to analyze games of chance by Gerolamo Cardano in the sixteenth century, and by Pierre de Fermat and Blaise Pascal in the seventeenth century (for example the "problem of points"). Christiaan Huygens published a book on the subject in 1657[3] and in the 19th century, Pierre Laplace completed what is today considered the classic interpretation.[4]

History of probability

Initially, probability theory mainly considered discrete events, and its methods were mainly combinatorial. Eventually, analytical considerations compelled the incorporation of continuous variables into the theory.

This culminated in modern probability theory, on foundations laid by Andrey Nikolaevich Kolmogorov. Kolmogorov combined the notion of sample space, introduced by Richard von Mises, and measure theory and presented his axiom system for probability theory in 1933. This became the mostly undisputed axiomatic basis for modern probability theory; but, alternatives exist, such as the adoption of finite rather than countable additivity by Bruno de Finetti.[5]

Treatment

Most introductions to probability theory treat discrete probability distributions and continuous probability distributions separately. The measure theory-based treatment of probability covers the discrete, continuous, a mix of the two, and more.

Motivation

Consider an experiment that can produce a number of outcomes. The set of all outcomes is called the sample space of the experiment. The power set of the sample space (or equivalently, the event space) is formed by considering all different collections of possible results. For example, rolling an honest die produces one of six possible results. One collection of possible results corresponds to getting an odd number. Thus, the subset {1,3,5} is an element of the power set of the sample space of die rolls. These collections are called events. In this case, {1,3,5} is the event that the die falls on some odd number. If the results that actually occur fall in a given event, that event is said to have occurred.

Probability is a way of assigning every "event" a value between zero and one, with the requirement that the event made up of all possible results (in our example, the event {1,2,3,4,5,6}) be assigned a value of one. To qualify as a probability distribution, the assignment of values must satisfy the requirement that if you look at a collection of mutually exclusive events (events that contain no common results, e.g., the events {1,6}, {3}, and {2,4} are all mutually exclusive), the probability that any of these events occurs is given by the sum of the probabilities of the events.[6]

The probability that any one of the events {1,6}, {3}, or {2,4} will occur is 5/6. This is the same as saying that the probability of event {1,2,3,4,6} is 5/6. This event encompasses the possibility of any number except five being rolled. The mutually exclusive event {5} has a probability of 1/6, and the event {1,2,3,4,5,6} has a probability of 1, that is, absolute certainty.
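As a minimal sketch (not part of the quoted article), the die example can be checked with exact fractions in Python. The names `SAMPLE_SPACE` and `prob` are illustrative assumptions, and equal weights are assumed for every face.

```python
from fractions import Fraction

# Sample space of a fair six-sided die; every outcome is assumed equally likely.
SAMPLE_SPACE = frozenset({1, 2, 3, 4, 5, 6})

def prob(event):
    """Probability of an event, i.e. a subset of SAMPLE_SPACE, under equal weights."""
    event = frozenset(event)
    if not event <= SAMPLE_SPACE:
        raise ValueError("event must be a subset of the sample space")
    return Fraction(len(event), len(SAMPLE_SPACE))

# The three mutually exclusive events from the text.
events = [{1, 6}, {3}, {2, 4}]
union = set().union(*events)

# Additivity: the probability of the union equals the sum of the probabilities.
sum_of_parts = sum(prob(e) for e in events)
prob_of_union = prob(union)
```

Both `sum_of_parts` and `prob_of_union` come out as 5/6, matching the value stated for the event {1,2,3,4,6}.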

When doing calculations using the outcomes of an experiment, it is necessary that all those elementary events have a number assigned to them. This is done using a random variable. A random variable is a function that assigns to each elementary event in the sample space a real number. This function is usually denoted by a capital letter.[7] In the case of a die, the assignment of a number to a certain elementary event is obvious, namely 1 for 1, 2 for 2, etc. This is not always the case. For example, when flipping a coin the two possible outcomes are "heads" and "tails". In this example, the random variable X could assign to the outcome "heads" the number "0" ($X(\text{heads}) = 0$) and to the outcome "tails" the number "1" ($X(\text{tails}) = 1$).

Discrete probability distributions

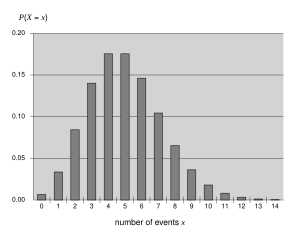

The Poisson distribution, a discrete probability distribution.

Examples: Throwing dice, experiments with decks of cards, random walk, and tossing coins

Classical definition: Initially the probability of an event to occur was defined as the number of cases favorable for the event, over the number of total outcomes possible in an equiprobable sample space: see Classical definition of probability.

For example, if the event is "occurrence of an even number when a die is rolled", the probability is given by $\tfrac{3}{6} = \tfrac{1}{2}$, since 3 faces out of the 6 have even numbers and each face has the same probability of appearing.

Modern definition: The modern definition starts with a finite or countable set called the sample space, which relates to the set of all possible outcomes in classical sense, denoted by $\Omega$. It is then assumed that for each element $x \in \Omega$, an intrinsic "probability" value $f(x)$ is attached, which satisfies the following properties:

- $f(x) \in [0, 1]$ for all $x \in \Omega$;

- $\sum_{x \in \Omega} f(x) = 1$.

An event is defined as any subset $E$ of the sample space $\Omega$. The probability of the event $E$ is defined as

$$P(E) = \sum_{x \in E} f(x).$$

The function $f(x)$ mapping a point in the sample space to the "probability" value is called a probability mass function abbreviated as pmf.

The modern definition does not try to answer how probability mass functions are obtained; instead, it builds a theory that assumes their existence.
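The pmf properties and the event probability can be sketched for a small finite sample space. This is only an illustration: the loaded-die values in `pmf` are hypothetical, and `is_valid_pmf` and `event_probability` are names introduced here, not taken from the article.

```python
from fractions import Fraction

# Hypothetical pmf of a loaded die: face 6 comes up half of the time.
pmf = {face: Fraction(1, 10) for face in range(1, 6)}
pmf[6] = Fraction(1, 2)

def is_valid_pmf(f):
    """Check both pmf properties: each value lies in [0, 1] and all values sum to 1."""
    return all(0 <= p <= 1 for p in f.values()) and sum(f.values()) == 1

def event_probability(f, event):
    """P(E) is the sum of f(x) over the outcomes x in the event E."""
    return sum((f[x] for x in event), Fraction(0))

# Probability of rolling an even number with the loaded die.
p_even = event_probability(pmf, {2, 4, 6})
```

Here `p_even` is 7/10 rather than the 1/2 of a fair die, which shows that the theory works with whatever pmf is assumed and does not say where it comes from.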

Continuous probability distributions

The normal distribution, a continuous probability distribution.

Classical definition: The classical definition breaks down when confronted with the continuous case. See Bertrand's paradox.

Modern definition: If the outcome space of a random variable X is the set of real numbers ($\mathbb{R}$) or a subset thereof, then a function called the cumulative distribution function (or cdf) $F$ exists, defined by $F(x) = P(X \le x)$. That is, F(x) returns the probability that X will be less than or equal to x.

The cdf necessarily satisfies the following properties.

- $F$ is a monotonically non-decreasing, right-continuous function;

- $\lim_{x \to -\infty} F(x) = 0$;

- $\lim_{x \to \infty} F(x) = 1$.

If $F$ is absolutely continuous, i.e., its derivative exists and integrating the derivative gives us the cdf back again, then the random variable X is said to have a probability density function or pdf or simply density $f(x) = \frac{dF(x)}{dx}$.

For a set $E \subseteq \mathbb{R}$, the probability of the random variable X being in $E$ is

$$P(X \in E) = \int_{x \in E} dF(x).$$

These concepts can be generalized for multidimensional cases on $\mathbb{R}^n$ and other continuous sample spaces.
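The relation between cdf and density can be checked numerically. The sketch below assumes a standard normal distribution as the example and uses only the standard library; the function names are illustrative.

```python
import math

def normal_cdf(x):
    """cdf F(x) = P(X <= x) of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def normal_pdf(x):
    """Density f(x) of the standard normal distribution."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def numeric_derivative(F, x, h=1e-5):
    """Central-difference approximation of dF/dx at x."""
    return (F(x + h) - F(x - h)) / (2.0 * h)

def interval_probability(a, b):
    """P(a < X <= b) = F(b) - F(a)."""
    return normal_cdf(b) - normal_cdf(a)
```

For instance, `numeric_derivative(normal_cdf, 0.7)` agrees closely with `normal_pdf(0.7)`, and `interval_probability(-1, 1)` gives the familiar value of roughly 0.68.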

Measure-theoretic probability theory

The raison d'être of the measure-theoretic treatment of probability is that it unifies the discrete and the continuous cases, and makes the difference a question of which measure is used. Furthermore, it covers distributions that are neither discrete nor continuous nor mixtures of the two.

An example of such distributions could be a mix of discrete and continuous distributions, such as a random variable that is 0 with probability 1/2, and takes a random value from a normal distribution with probability 1/2. It can still be studied to some extent by considering it to have a pdf of $(\delta[x] + \varphi(x))/2$, where $\delta[x]$ is the Dirac delta function.
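A small simulation makes the mixed example concrete. This sketch assumes the normal component is the standard normal (mean 0, variance 1); `sample_mixed` and `mixed_cdf` are names introduced here for illustration.

```python
import math
import random

def sample_mixed(rng):
    """Draw 0 with probability 1/2, otherwise a standard normal value."""
    if rng.random() < 0.5:
        return 0.0
    return rng.gauss(0.0, 1.0)

def mixed_cdf(x):
    """cdf of the mixture: half a unit step at 0 plus half the standard normal cdf."""
    step = 1.0 if x >= 0 else 0.0
    normal = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return 0.5 * step + 0.5 * normal

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_mixed(rng) for _ in range(100_000)]

# Share of draws that are exactly 0: the point mass of the distribution.
fraction_at_zero = sum(d == 0.0 for d in draws) / len(draws)
```

About half of the draws are exactly 0, and the cdf jumps by 1/2 at that point, which is why the distribution has neither an ordinary density nor a pmf alone.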

Other distributions may not even be a mix, for example, the Cantor distribution has no positive probability for any single point, neither does it have a density. The modern approach to probability theory solves these problems using measure theory to define the probability space:

Given any set $\Omega$ (also called sample space) and a σ-algebra $\mathcal{F}$ on it, a measure $P$ defined on $\mathcal{F}$ is called a probability measure if $P(\Omega) = 1$.

If $\mathcal{F}$ is the Borel σ-algebra on the set of real numbers, then there is a unique probability measure on $\mathcal{F}$ for any cdf, and vice versa. The measure corresponding to a cdf is said to be induced by the cdf. This measure coincides with the pmf for discrete variables and pdf for continuous variables, making the measure-theoretic approach free of fallacies.

The probability of a set $E$ in the σ-algebra $\mathcal{F}$ is defined as

$$P(E) = \int_{\omega \in E} \mu_F(d\omega),$$

where the integration is with respect to the measure $\mu_F$ induced by $F$.

Along with providing better understanding and unification of discrete and continuous probabilities, measure-theoretic treatment also allows us to work on probabilities outside $\mathbb{R}^n$, as in the theory of stochastic processes. For example, to study Brownian motion, probability is defined on a space of functions.

When it's convenient to work with a dominating measure, the Radon-Nikodym theorem is used to define a density as the Radon-Nikodym derivative of the probability distribution of interest with respect to this dominating measure. Discrete densities are usually defined as this derivative with respect to a counting measure over the set of all possible outcomes. Densities for absolutely continuous distributions are usually defined as this derivative with respect to the Lebesgue measure. If a theorem can be proved in this general setting, it holds for both discrete and continuous distributions as well as others; separate proofs are not required for discrete and continuous distributions.

Classical probability distributions

Certain random variables occur very often in probability theory because they well describe many natural or physical processes. Their distributions, therefore, have gained special importance in probability theory. Some fundamental discrete distributions are the discrete uniform, Bernoulli, binomial, negative binomial, Poisson and geometric distributions. Important continuous distributions include the continuous uniform, normal, exponential, gamma and beta distributions.

Convergence of random variables

In probability theory, there are several notions of convergence for random variables. They are listed below in the order of strength, i.e., any subsequent notion of convergence in the list implies convergence according to all of the preceding notions.

- Weak convergence

  - A sequence of random variables $X_1, X_2, \dots$ converges weakly to the random variable $X$ if their respective cumulative distribution functions $F_1, F_2, \dots$ converge to the cumulative distribution function $F$ of $X$, wherever $F$ is continuous. Weak convergence is also called convergence in distribution.

  - Most common shorthand notation: $X_n \xrightarrow{\mathcal{D}} X$

- Convergence in probability

  - The sequence of random variables $X_1, X_2, \dots$ is said to converge towards the random variable $X$ in probability if $\lim_{n \to \infty} P(|X_n - X| \ge \varepsilon) = 0$ for every ε > 0.

  - Most common shorthand notation: $X_n \xrightarrow{P} X$

- Strong convergence

  - The sequence of random variables $X_1, X_2, \dots$ is said to converge towards the random variable $X$ strongly if $P(\lim_{n \to \infty} X_n = X) = 1$. Strong convergence is also known as almost sure convergence.

  - Most common shorthand notation: $X_n \xrightarrow{\mathrm{a.s.}} X$

Law of large numbers

Common intuition suggests that if a fair coin is tossed many times, then roughly half of the time it will turn up heads, and the other half it will turn up tails. Furthermore, the more often the coin is tossed, the more likely it should be that the ratio of the number of heads to the number of tails will approach unity. Modern probability theory provides a formal version of this intuitive idea, known as the law of large numbers. This law is remarkable because it is not assumed in the foundations of probability theory, but instead emerges from these foundations as a theorem. Since it links theoretically derived probabilities to their actual frequency of occurrence in the real world, the law of large numbers is considered as a pillar in the history of statistical theory and has had widespread influence.[8]

The law of large numbers (LLN) states that the sample average $\overline{X}_n = \frac{1}{n} \sum_{k=1}^{n} X_k$ of a sequence of independent and identically distributed random variables $X_k$ converges towards their common expectation $\mu$, provided that the expectation of $|X_k|$ is finite.

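It is the different forms of convergence of random variables that separate the weak and the strong law of large numbers:

- Weak law: $\overline{X}_n \xrightarrow{P} \mu$ for $n \to \infty$

- Strong law: $\overline{X}_n \xrightarrow{\mathrm{a.s.}} \mu$ for $n \to \infty$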

For example, if $Y_1, Y_2, \dots$ are independent Bernoulli random variables taking values 1 with probability p and 0 with probability 1-p, then $E(Y_i) = p$ for all i, so that $\overline{Y}_n$ converges to p almost surely.
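The Bernoulli example can be illustrated with a short simulation. This is a sketch under stated assumptions: the value p = 0.3, the sample sizes, and the fixed seed are arbitrary choices made here, and a finite simulation can only suggest convergence, not prove it.

```python
import random

def bernoulli_sample_mean(p, n, seed=0):
    """Average of n simulated Bernoulli(p) draws, using a seeded generator."""
    rng = random.Random(seed)
    successes = sum(1 for _ in range(n) if rng.random() < p)
    return successes / n

# Sample means for growing n; they should settle near p = 0.3.
sample_means = {n: bernoulli_sample_mean(0.3, n) for n in (10, 1_000, 100_000)}
```

With more draws, the entries of `sample_means` tend to sit closer to 0.3, which is the behaviour the law of large numbers describes.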

Central limit theorem

"The central limit theorem (CLT) is one of the great results of mathematics." (Chapter 18 in[9]) It explains the ubiquitous occurrence of the normal distribution in nature.The theorem states that the average of many independent and identically distributed random variables with finite variance tends towards a normal distribution irrespective of the distribution followed by the original random variables. Formally, let

$X_1, X_2, \dots$ be independent random variables with mean $\mu$ and variance $\sigma^2 > 0$. Then the sequence of random variables

$$Z_n = \frac{\sum_{i=1}^{n} (X_i - \mu)}{\sigma \sqrt{n}}$$

converges in distribution to a standard normal random variable.

For some classes of random variables the classic central limit theorem works rather fast (see Berry–Esseen theorem), for example the distributions with finite first, second, and third moment from the exponential family; on the other hand, for some random variables of the heavy tail and fat tail variety, it works very slowly or may not work at all: in such cases one may use the Generalized Central Limit Theorem (GCLT).
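The central limit theorem can also be explored by simulation. The sketch below assumes Uniform(0, 1) draws (mean 1/2, variance 1/12) as the underlying distribution and checks how often the standardized sum lands in [-1.96, 1.96], an interval that holds about 95% of a standard normal. The function names, sample size, and seed are choices made here.

```python
import math
import random

def standardized_sum(rng, n):
    """Z_n for n Uniform(0, 1) draws, which have mean 1/2 and variance 1/12."""
    mu, sigma = 0.5, math.sqrt(1.0 / 12.0)
    total = sum(rng.random() for _ in range(n))
    return (total - n * mu) / (sigma * math.sqrt(n))

def coverage(n=30, trials=20_000, seed=0):
    """Fraction of simulated Z_n values falling inside [-1.96, 1.96]."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(trials) if abs(standardized_sum(rng, n)) <= 1.96)
    return hits / trials
```

Calling `coverage()` gives a value close to 0.95 even though the individual draws are uniform rather than normal. Heavy-tailed inputs would need far larger n, as the paragraph above notes.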

See also

- Catalog of articles in probability theory

- Expected value and Variance

- Fuzzy logic and Fuzzy measure theory

- Glossary of probability and statistics

- Likelihood function

- List of probability topics

- List of publications in statistics

- List of statistical topics

- Notation in probability

- Predictive modelling

- Probabilistic logic – A combination of probability theory and logic

- Probabilistic proofs of non-probabilistic theorems

- Probability distribution

- Probability axioms

- Probability interpretations

- Probability space

- Statistical independence

- Subjective logic

Notes

- David Williams, "Probability with martingales", Cambridge 1991/2008

References

- Pierre Simon de Laplace (1812). Analytical Theory of Probability. The first major treatise blending calculus with probability theory, originally in French: Théorie Analytique des Probabilités.

- A. Kolmogoroff (1933). Grundbegriffe der Wahrscheinlichkeitsrechnung. doi:10.1007/978-3-642-49888-6. ISBN 978-3-642-49888-6. An English translation by Nathan Morrison appeared under the title Foundations of the Theory of Probability (Chelsea, New York) in 1950, with a second edition in 1956.

- Patrick Billingsley (1979). Probability and Measure. New York, Toronto, London: John Wiley and Sons.

- Olav Kallenberg (2002). Foundations of Modern Probability, 2nd ed. Springer Series in Statistics. 650 pp. ISBN 0-387-95313-2.

- Henk Tijms (2004). Understanding Probability. Cambridge Univ. Press. A lively introduction to probability theory for the beginner.

- Olav Kallenberg (2005). Probabilistic Symmetries and Invariance Principles. Springer-Verlag, New York. 510 pp. ISBN 0-387-25115-4.

- Allan Gut (2005). Probability: A Graduate Course. Springer-Verlag. ISBN 0-387-22833-0.

Logic

Logic (from the Ancient Greek: λογική, translit. logikḗ[1]), originally meaning "the word" or "what is spoken", but coming to mean "thought" or "reason", is a subject concerned with the most general laws of truth,[2] and is now generally held to consist of the systematic study of the form of valid inference. A valid inference is one where there is a specific relation of logical support between the assumptions of the inference and its conclusion. (In ordinary discourse, inferences may be signified by words such as therefore, hence, ergo, and so on.)

There is no universal agreement as to the exact scope and subject matter of logic (see § Rival conceptions, below), but it has traditionally included the classification of arguments, the systematic exposition of the 'logical form' common to all valid arguments, the study of inference, including fallacies, and the study of semantics, including paradoxes. Historically, logic has been studied in philosophy (since ancient times) and mathematics (since the mid-19th century), and recently logic has been studied in computer science, linguistics, psychology, and other fields.

Concepts

"Upon this first, and in one sense this sole, rule of reason, that in order to learn you must desire to learn, and in so desiring not be satisfied with what you already incline to think, there follows one corollary which itself deserves to be inscribed upon every wall of the city of philosophy: Do not block the way of inquiry."

Charles Sanders Peirce, "First Rule of Logic"

- Informal logic is the study of natural language arguments. The study of fallacies is an important branch of informal logic. Since much informal argument is not strictly speaking deductive, on some conceptions of logic, informal logic is not logic at all. See 'Rival conceptions', below.

- Formal logic is the study of inference with purely formal content. An inference possesses a purely formal content if it can be expressed as a particular application of a wholly abstract rule, that is, a rule that is not about any particular thing or property. The works of Aristotle contain the earliest known formal study of logic. Modern formal logic follows and expands on Aristotle.[3] In many definitions of logic, logical inference and inference with purely formal content are the same. This does not render the notion of informal logic vacuous, because no formal logic captures all of the nuances of natural language.

- Symbolic logic is the study of symbolic abstractions that capture the formal features of logical inference.[4][5] Symbolic logic is often divided into two main branches: propositional logic and predicate logic.

- Mathematical logic is an extension of symbolic logic into other areas, in particular to the study of model theory, proof theory, set theory, and recursion theory.

Logical form

Logic is generally considered formal when it analyzes and represents the form of any valid argument type. The form of an argument is displayed by representing its sentences in the formal grammar and symbolism of a logical language to make its content usable in formal inference. Simply put, to formalize simply means to translate English sentences into the language of logic.

This is called showing the logical form of the argument. It is necessary because indicative sentences of ordinary language show a considerable variety of form and complexity that makes their use in inference impractical. It requires, first, ignoring those grammatical features irrelevant to logic (such as gender and declension, if the argument is in Latin), replacing conjunctions irrelevant to logic (such as "but") with logical conjunctions like "and" and replacing ambiguous, or alternative logical expressions ("any", "every", etc.) with expressions of a standard type (such as "all", or the universal quantifier ∀).

Second, certain parts of the sentence must be replaced with schematic letters. Thus, for example, the expression "all Ps are Qs" shows the logical form common to the sentences "all men are mortals", "all cats are carnivores", "all Greeks are philosophers", and so on. The schema can further be condensed into the formula A(P,Q), where the letter A indicates the judgement 'all - are -'.

The importance of form was recognised from ancient times. Aristotle uses variable letters to represent valid inferences in Prior Analytics, leading Jan Łukasiewicz to say that the introduction of variables was "one of Aristotle's greatest inventions".[7] According to the followers of Aristotle (such as Ammonius), only the logical principles stated in schematic terms belong to logic, not those given in concrete terms. The concrete terms "man", "mortal", etc., are analogous to the substitution values of the schematic placeholders P, Q, R, which were called the "matter" (Greek hyle) of the inference.

There is a big difference between the kinds of formulas seen in traditional term logic and the predicate calculus that is the fundamental advance of modern logic. The formula A(P,Q) (all Ps are Qs) of traditional logic corresponds to the more complex formula ∀x (P(x) → Q(x)) in predicate logic, involving the logical connectives for universal quantification and implication rather than just the predicate letter A, and using variable arguments P(x) where traditional logic uses just the term letter P. With the complexity comes power, and the advent of the predicate calculus inaugurated revolutionary growth of the subject.
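As a rough illustration of the predicate-logic reading of "all Ps are Qs", the sketch below evaluates ∀x (P(x) → Q(x)) over a small finite domain. The domain and the predicates `is_greek` and `is_philosopher` are invented for the example and are not part of the original text.

```python
def implies(p: bool, q: bool) -> bool:
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def all_p_are_q(domain, p, q) -> bool:
    """Evaluate 'for every x in the domain, P(x) implies Q(x)'."""
    return all(implies(p(x), q(x)) for x in domain)


# Hypothetical toy domain, chosen only for illustration.
domain = ["Socrates", "Plato", "Felix"]
greeks = {"Socrates", "Plato"}
philosophers = {"Socrates", "Plato"}


def is_greek(x):
    return x in greeks


def is_philosopher(x):
    return x in philosophers


# Every Greek in this toy domain is a philosopher.
result = all_p_are_q(domain, is_greek, is_philosopher)
```

The quantifier ranges over individuals in the domain, while the schematic letters P and Q become functions applied to each individual, which is the extra structure the term-logic formula A(P,Q) leaves implicit.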

Semantics

The validity of an argument depends upon the meaning or semantics of the sentences that make it up. Aristotle's Organon, especially On Interpretation, gives a cursory outline of semantics which the scholastic logicians, particularly in the thirteenth and fourteenth century, developed into a complex and sophisticated theory, called Supposition Theory. This showed how the truth of simple sentences, expressed schematically, depends on how the terms 'supposit' or stand for certain extra-linguistic items. For example, in part II of his Summa Logicae, William of Ockham presents a comprehensive account of the necessary and sufficient conditions for the truth of simple sentences, in order to show which arguments are valid and which are not. Thus 'every A is B' is true if and only if there is something for which 'A' stands, and there is nothing for which 'A' stands for which 'B' does not also stand.[8]

Early modern logic defined semantics purely as a relation between ideas. Antoine Arnauld, in the Port Royal Logic, says that 'after conceiving things by our ideas, we compare these ideas, and, finding that some belong together and some do not, we unite or separate them. This is called affirming or denying, and in general judging.'[9] Thus truth and falsity are no more than the agreement or disagreement of ideas. This suggests obvious difficulties, leading Locke to distinguish between 'real' truth, when our ideas have 'real existence', and 'imaginary' or 'verbal' truth, where ideas like harpies or centaurs exist only in the mind.[10] This view (psychologism) was taken to the extreme in the nineteenth century, and is generally held by modern logicians to signify a low point in the decline of logic before the twentieth century.

Modern semantics is in some ways closer to the medieval view, in rejecting such psychological truth-conditions. However, the introduction of quantification, needed to solve the problem of multiple generality, rendered impossible the kind of subject-predicate analysis that underlies medieval semantics. The main modern approach is model-theoretic semantics, based on Alfred Tarski's semantic theory of truth. The approach assumes that the meaning of the various parts of the propositions are given by the possible ways we can give a recursively specified group of interpretation functions from them to some predefined domain of discourse: an interpretation of first-order predicate logic is given by a mapping from terms to a universe of individuals, and a mapping from propositions to the truth values "true" and "false". Model-theoretic semantics is one of the fundamental concepts of model theory. Modern semantics also admits rival approaches, such as the proof-theoretic semantics that associates the meaning of propositions with the roles that they can play in inferences, an approach that ultimately derives from the work of Gerhard Gentzen on structural proof theory and is heavily influenced by Ludwig Wittgenstein's later philosophy, especially his aphorism "meaning is use".

Inference

Inference is not to be confused with implication. An implication is a sentence of the form 'If p then q', and can be true or false. The Stoic logician Philo of Megara was the first to define the truth conditions of such an implication: false only when the antecedent p is true and the consequent q is false, in all other cases true. An inference, on the other hand, consists of two separately asserted propositions of the form 'p therefore q'. An inference is not true or false, but valid or invalid. However, there is a connection between implication and inference, as follows: if the implication 'if p then q' is true, the inference 'p therefore q' is valid. This was given an apparently paradoxical formulation by Philo, who said that the implication 'if it is day, it is night' is true only at night, so the inference 'it is day, therefore it is night' is valid in the night, but not in the day.

The theory of inference (or 'consequences') was systematically developed in medieval times by logicians such as William of Ockham and Walter Burley. It is uniquely medieval, though it has its origins in Aristotle's Topics and Boethius' De Syllogismis hypotheticis. This is why many terms in logic are Latin. For example, the rule that licenses the move from the implication 'if p then q' plus the assertion of its antecedent p, to the assertion of the consequent q is known as modus ponens (or 'mode of positing'). Its Latin formulation is 'Posito antecedente ponitur consequens'. The Latin formulations of many other rules such as 'ex falso quodlibet' (anything follows from a falsehood) and 'reductio ad absurdum' (disproof by showing the consequence is absurd) also date from this period.
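The truth conditions attributed to Philo and the modus ponens rule can be checked mechanically. The following is a minimal sketch, assuming the modern notion of validity (no assignment of truth values makes every premise true and the conclusion false); the helper names `implies` and `is_valid` are made up for the example.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Philo's truth conditions: false only when p is true and q is false."""
    return (not p) or q


# Truth table of 'if p then q' over all four combinations of truth values.
truth_table = {(p, q): implies(p, q) for p, q in product([True, False], repeat=2)}


def is_valid(premises, conclusion, n_vars: int) -> bool:
    """An inference is valid if no valuation makes all premises true and the conclusion false."""
    for vals in product([True, False], repeat=n_vars):
        if all(prem(*vals) for prem in premises) and not conclusion(*vals):
            return False
    return True


# Modus ponens: from 'if p then q' and p, infer q.
modus_ponens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: p],
    lambda p, q: q,
    2,
)

# Affirming the consequent: from 'if p then q' and q, infer p (not valid).
affirming_consequent = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
    2,
)
```

Note the division of labour: `implies` returns a truth value for one valuation, while `is_valid` classifies a whole inference, mirroring the distinction between a true implication and a valid inference.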

However, the theory of consequences, or of the so-called 'hypothetical syllogism' was never fully integrated into the theory of the 'categorical syllogism'. This was partly because of the resistance to reducing the categorical judgment 'Every S is P' to the so-called hypothetical judgment 'if anything is S, it is P'. The first was thought to imply 'some S is P', the second was not, and as late as 1911 in the Encyclopædia Britannica article on Logic, we find the Oxford logician T.H. Case arguing against Sigwart's and Brentano's modern analysis of the universal proposition.
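The disagreement between the two readings of 'Every S is P' comes down to what happens when nothing is S. A small sketch with Python sets, under the assumption that terms can be modelled by the sets of things they stand for; the function names and the example sets are hypothetical.

```python
def every_categorical(s: set, p: set) -> bool:
    """Traditional reading: something is S, and nothing that is S fails to be P."""
    return len(s) > 0 and s <= p


def every_hypothetical(s: set, p: set) -> bool:
    """Modern reading: if anything is S, it is P (vacuously true when S is empty)."""
    return s <= p


def some(s: set, p: set) -> bool:
    """'Some S is P': at least one thing is both S and P."""
    return len(s & p) > 0


# Illustrative sets only.
men = {"Socrates", "Plato"}
mortals = {"Socrates", "Plato", "Felix"}
unicorns = set()
horned = {"rhino"}

# With a non-empty subject term the two readings agree.
agree = every_categorical(men, mortals) == every_hypothetical(men, mortals)

# With an empty subject term they come apart.
vacuous = every_hypothetical(unicorns, horned)        # holds vacuously
with_import = every_categorical(unicorns, horned)     # fails: nothing is a unicorn
```

Only the categorical reading guarantees that 'Every S is P' implies 'Some S is P', which is the point on which the traditional and modern analyses differ.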

Logical systems

A formal system is an organization of terms used for the analysis of deduction. It consists of an alphabet, a language over the alphabet to construct sentences, and a rule for deriving sentences. Among the important properties that logical systems can have are:

- Consistency, which means that no theorem of the system contradicts another.[11]

- Validity, which means that the system's rules of proof never allow a false inference from true premises.

- Completeness, which means that if a formula is true, it can be proven, i.e. is a theorem of the system.

- Soundness, meaning that if any formula is a theorem of the system, it is true. This is the converse of completeness. (Note that in a distinct philosophical use of the term, an argument is sound when it is both valid and its premises are true).[12]

Logic and rationality


Deductive reasoning concerns the logical consequence of given premises and is the form of reasoning most closely connected to logic. On a narrow conception of logic (see below) logic concerns just deductive reasoning, although such a narrow conception controversially excludes most of what is called informal logic from the discipline.

There are other forms of reasoning that are rational but that are generally not taken to be part of logic. These include inductive reasoning, which covers forms of inference that move from collections of particular judgements to universal judgements, and abductive reasoning,[14] which is a form of inference that goes from observation to a hypothesis that accounts for the reliable data (observation) and seeks to explain relevant evidence. The American philosopher Charles Sanders Peirce (1839–1914) first introduced the term as "guessing".[15] Peirce said that to abduce a hypothetical explanation A from an observed surprising circumstance B is to surmise that A may be true because then B would be a matter of course.[16] Thus, to abduce A from B involves determining that A is sufficient (or nearly sufficient), but not necessary, for B.

While inductive and abductive inference are not part of logic proper, the methodology of logic has been applied to them with some degree of success. For example, the notion of deductive validity (where an inference is deductively valid if and only if there is no possible situation in which all the premises are true but the conclusion false) exists in an analogy to the notion of inductive validity, or "strength", where an inference is inductively strong if and only if its premises give some degree of probability to its conclusion. Whereas the notion of deductive validity can be rigorously stated for systems of formal logic in terms of the well-understood notions of semantics, inductive validity requires us to define a reliable generalization of some set of observations. The task of providing this definition may be approached in various ways, some less formal than others; some of these definitions may use logical association rule induction, while others may use mathematical models of probability such as decision trees.

Rival conceptions

Logic arose (see below) from a concern with correctness of argumentation. Modern logicians usually wish to ensure that logic studies just those arguments that arise from appropriately general forms of inference. For example, Thomas Hofweber writes in the Stanford Encyclopedia of Philosophy that logic "does not, however, cover good reasoning as a whole. That is the job of the theory of rationality. Rather it deals with inferences whose validity can be traced back to the formal features of the representations that are involved in that inference, be they linguistic, mental, or other representations."[17]

Logic has been defined as "the study of arguments correct in virtue of their form". This has not been the definition taken in this article, but the idea that logic treats special forms of argument, deductive argument, rather than argument in general, has a history in logic that dates back at least to logicism in mathematics (19th and 20th centuries) and the advent of the influence of mathematical logic on philosophy. A consequence of taking logic to treat special kinds of argument is that it leads to identification of special kinds of truth, the logical truths (with logic equivalently being the study of logical truth), and excludes many of the original objects of study of logic that are treated as informal logic. Robert Brandom has argued against the idea that logic is the study of a special kind of logical truth, arguing that instead one can talk of the logic of material inference (in the terminology of Wilfrid Sellars), with logic making explicit the commitments that were originally implicit in informal inference.[18]

History

The Chinese logical philosopher Gongsun Long (c. 325–250 BCE) proposed the paradox "One and one cannot become two, since neither becomes two."[25] In China, the tradition of scholarly investigation into logic, however, was repressed by the Qin dynasty following the legalist philosophy of Han Feizi.

In India, the Anviksiki school of logic was founded by Medhatithi Gautama (c. 6th century BCE).[26] Innovations in the scholastic school, called Nyaya, continued from ancient times into the early 18th century with the Navya-Nyaya school. By the 16th century, it developed theories resembling modern logic, such as Gottlob Frege's "distinction between sense and reference of proper names" and his "definition of number", as well as the theory of "restrictive conditions for universals" anticipating some of the developments in modern set theory.[27] Since 1824, Indian logic has attracted the attention of many Western scholars, and has had an influence on important 19th-century logicians such as Charles Babbage, Augustus De Morgan, and George Boole.[28] In the 20th century, Western philosophers like Stanislaw Schayer and Klaus Glashoff have explored Indian logic more extensively.

The syllogistic logic developed by Aristotle predominated in the West until the mid-19th century, when interest in the foundations of mathematics stimulated the development of symbolic logic (now called mathematical logic). In 1854, George Boole published An Investigation of the Laws of Thought on Which are Founded the Mathematical Theories of Logic and Probabilities, introducing symbolic logic and the principles of what is now known as Boolean logic. In 1879, Gottlob Frege published Begriffsschrift, which inaugurated modern logic with the invention of quantifier notation. From 1910 to 1913, Alfred North Whitehead and Bertrand Russell published Principia Mathematica[4] on the foundations of mathematics, attempting to derive mathematical truths from axioms and inference rules in symbolic logic. In 1931, Gödel raised serious problems with the foundationalist program and logic ceased to focus on such issues.

The development of logic since Frege, Russell, and Wittgenstein had a profound influence on the practice of philosophy and the perceived nature of philosophical problems (see analytic philosophy) and philosophy of mathematics. Logic, especially sentential logic, is implemented in computer logic circuits and is fundamental to computer science. Logic is commonly taught by university philosophy departments, often as a compulsory discipline.

Types

Syllogistic logic

A depiction from the 15th century of the square of opposition, which expresses the fundamental dualities of syllogistic.

Aristotle's work was regarded in classical times and from medieval times in Europe and the Middle East as the very picture of a fully worked out system. However, it was not alone: the Stoics proposed a system of propositional logic that was studied by medieval logicians. Also, the problem of multiple generality was recognized in medieval times. Nonetheless, problems with syllogistic logic were not seen as being in need of revolutionary solutions.

Today, some academics claim that Aristotle's system is generally seen as having little more than historical value (though there is some current interest in extending term logics), regarded as made obsolete by the advent of propositional logic and the predicate calculus. Others use Aristotle in argumentation theory to help develop and critically question argumentation schemes that are used in artificial intelligence and legal arguments.

I was upset. I had always believed logic was a universal weapon, and now I realized how its validity depended on the way it was employed.[30]

Propositional logic

A propositional calculus or logic (also a sentential calculus) is a formal system in which formulae representing propositions can be formed by combining atomic propositions using logical connectives, and in which a system of formal proof rules establishes certain formulae as "theorems". An example of a theorem of propositional logic is A → (B → A), which says that if A holds, then B implies A.
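For classical propositional logic, the theorems of the usual proof systems coincide with the tautologies, so the example theorem A → (B → A) can be confirmed by brute force over truth values. The sketch below is a semantic check, not a formal proof, and the helper `is_tautology` is a name introduced only for this illustration.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication."""
    return (not p) or q


def is_tautology(formula, n_vars: int) -> bool:
    """True if the formula evaluates to True under every assignment of truth values."""
    return all(formula(*vals) for vals in product([True, False], repeat=n_vars))


# A -> (B -> A): true under all four assignments.
theorem = is_tautology(lambda a, b: implies(a, implies(b, a)), 2)

# A -> B on its own is not a tautology (it fails when A is true and B is false).
non_theorem = is_tautology(lambda a, b: implies(a, b), 2)
```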

Predicate logic

Gottlob Frege's Begriffsschrift introduced the notion of quantifier in a graphical notation.

For example, the barber paradox, "there is a man who shaves all and only those men who do not shave themselves", can be formalised by the sentence ∃x (Man(x) ∧ ∀y (Man(y) → (Shaves(x, y) ↔ ¬Shaves(y, y)))), using the non-logical predicate Man(x) to indicate that x is a man, and the non-logical relation Shaves(x, y) to indicate that x shaves y; all other symbols of the formulae are logical, expressing the universal and existential quantifiers, conjunction, implication, negation and biconditional.
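Assuming the sentence in question is the usual formalisation of the barber paradox, ∃x (Man(x) ∧ ∀y (Man(y) → (Shaves(x, y) ↔ ¬Shaves(y, y)))), the sketch below searches every interpretation over very small domains for one that makes it true. The function names are hypothetical, and an exhaustive search over small domains illustrates the paradox rather than proving unsatisfiability in general.

```python
from itertools import product


def barber_exists(domain, men, shaves) -> bool:
    """Is there a man x who shaves exactly those men y who do not shave themselves?"""
    return any(
        x in men
        and all(
            (y not in men) or (((x, y) in shaves) == ((y, y) not in shaves))
            for y in domain
        )
        for x in domain
    )


def satisfiable_up_to(max_size: int) -> bool:
    """Try every choice of Man and Shaves on domains of size 1..max_size."""
    for n in range(1, max_size + 1):
        domain = range(n)
        pairs = [(x, y) for x in domain for y in domain]
        for men_bits in product([False, True], repeat=n):
            men = {x for x in domain if men_bits[x]}
            for rel_bits in product([False, True], repeat=len(pairs)):
                shaves = {pair for pair, bit in zip(pairs, rel_bits) if bit}
                if barber_exists(domain, men, shaves):
                    return True
    return False


# No interpretation with up to three individuals satisfies the sentence.
found_model = satisfiable_up_to(3)
```

The search fails for a simple reason: taking y to be the barber x himself requires Shaves(x, x) to hold exactly when it does not.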

Whilst Aristotelian syllogistic logic specifies a small number of forms that the relevant part of the involved judgements may take, predicate logic allows sentences to be analysed into subject and argument in several additional ways—allowing predicate logic to solve the problem of multiple generality that had perplexed medieval logicians.

The development of predicate logic is usually attributed to Gottlob Frege, who is also credited as one of the founders of analytical philosophy, but the formulation of predicate logic most often used today is the first-order logic presented in Principles of Mathematical Logic by David Hilbert and Wilhelm Ackermann in 1928. The analytical generality of predicate logic allowed the formalization of mathematics, drove the investigation of set theory, and allowed the development of Alfred Tarski's approach to model theory. It provides the foundation of modern mathematical logic.

Frege's original system of predicate logic was second-order, rather than first-order. Second-order logic is most prominently defended (against the criticism of Willard Van Orman Quine and others) by George Boolos and Stewart Shapiro.

Modal logic

In languages, modality deals with the phenomenon that sub-parts of a sentence may have their semantics modified by special verbs or modal particles. For example, "We go to the games" can be modified to give "We should go to the games", and "We can go to the games" and perhaps "We will go to the games". More abstractly, we might say that modality affects the circumstances in which we take an assertion to be satisfied. Confusing modality is known as the modal fallacy.

Aristotle's logic is in large parts concerned with the theory of non-modalized logic. Although there are passages in his work, such as the famous sea-battle argument in De Interpretatione § 9, that are now seen as anticipations of modal logic and its connection with potentiality and time, the earliest formal system of modal logic was developed by Avicenna, who ultimately developed a theory of "temporally modalized" syllogistic.[31]

While the study of necessity and possibility remained important to philosophers, little logical innovation happened until the landmark investigations of Clarence Irving Lewis in 1918, who formulated a family of rival axiomatizations of the alethic modalities. His work unleashed a torrent of new work on the topic, expanding the kinds of modality treated to include deontic logic and epistemic logic. The seminal work of Arthur Prior applied the same formal language to treat temporal logic and paved the way for the marriage of the two subjects. Saul Kripke discovered (contemporaneously with rivals) his theory of frame semantics, which revolutionized the formal technology available to modal logicians and gave a new graph-theoretic way of looking at modality that has driven many applications in computational linguistics and computer science, such as dynamic logic.
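The graph-theoretic picture behind frame semantics can be sketched in a few lines: worlds are nodes, the accessibility relation is a set of edges, and "necessarily p" at a world means p holds at every world reachable from it. The frame, the valuation, and the function names below are all invented for illustration.

```python
def box(frame, valuation, world, prop) -> bool:
    """'Necessarily prop': prop holds in every world accessible from `world`."""
    return all(prop in valuation[w] for w in frame[world])


def diamond(frame, valuation, world, prop) -> bool:
    """'Possibly prop': prop holds in at least one world accessible from `world`."""
    return any(prop in valuation[w] for w in frame[world])


# Hypothetical frame: each world maps to the list of worlds it can access.
frame = {"w1": ["w2", "w3"], "w2": ["w2"], "w3": []}

# Hypothetical valuation: the atomic propositions true at each world.
valuation = {"w1": {"p"}, "w2": {"p", "q"}, "w3": {"p"}}

necessarily_p = box(frame, valuation, "w1", "p")      # p holds at w2 and w3
necessarily_q = box(frame, valuation, "w1", "q")      # q fails at w3
possibly_q = diamond(frame, valuation, "w1", "q")     # q holds at w2
```

A world with no accessible worlds, such as `w3` here, makes every "necessarily" claim vacuously true and every "possibly" claim false, which is one way different constraints on the accessibility relation give rise to different modal systems.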

Informal reasoning and dialectic

The motivation for the study of logic in ancient times was clear: it is so that one may learn to distinguish good arguments from bad arguments, and so become more effective in argument and oratory, and perhaps also to become a better person. Half of the works of Aristotle's Organon treat inference as it occurs in an informal setting, side by side with the development of the syllogistic, and in the Aristotelian school, these informal works on logic were seen as complementary to Aristotle's treatment of rhetoric.

This ancient motivation is still alive, although it no longer takes centre stage in the picture of logic; typically dialectical logic forms the heart of a course in critical thinking, a compulsory course at many universities. Dialectic has been linked to logic since ancient times, but it has not been until recent decades that European and American logicians have attempted to provide mathematical foundations for logic and dialectic by formalising dialectical logic. Dialectical logic is also the name given to the special treatment of dialectic in Hegelian and Marxist thought. There have been pre-formal treatises on argument and dialectic, from authors such as Stephen Toulmin (The Uses of Argument), Nicholas Rescher (Dialectics),[32][33][34] and van Eemeren and Grootendorst (Pragma-dialectics). Theories of defeasible reasoning can provide a foundation for the formalisation of dialectical logic and dialectic itself can be formalised as moves in a game, where an advocate for the truth of a proposition and an opponent argue. Such games can provide a formal game semantics for many logics.

Argumentation theory is the study and research of informal logic, fallacies, and critical questions as they relate to everyday and practical situations. Specific types of dialogue can be analyzed and questioned to reveal premises, conclusions, and fallacies. Argumentation theory is now applied in artificial intelligence and law.

Mathematical logic

Mathematical logic comprises two distinct areas of research: the first is the application of the techniques of formal logic to mathematics and mathematical reasoning, and the second, in the other direction, the application of mathematical techniques to the representation and analysis of formal logic.[35]

The earliest use of mathematics and geometry in relation to logic and philosophy goes back to the ancient Greeks such as Euclid, Plato, and Aristotle.[36] Many other ancient and medieval philosophers applied mathematical ideas and methods to their philosophical claims.[37]

One of the boldest attempts to apply logic to mathematics was the logicism pioneered by philosopher-logicians such as Gottlob Frege and Bertrand Russell. Mathematical theories were supposed to be logical tautologies, and the programme was to show this by means of a reduction of mathematics to logic.[4] The various attempts to carry this out met with failure, from the crippling of Frege's project in his Grundgesetze by Russell's paradox, to the defeat of Hilbert's program by Gödel's incompleteness theorems.

Both the statement of Hilbert's program and its refutation by Gödel depended upon their work establishing the second area of mathematical logic, the application of mathematics to logic in the form of proof theory.[38] Despite the negative nature of the incompleteness theorems, Gödel's completeness theorem, a result in model theory and another application of mathematics to logic, can be understood as showing how close logicism came to being true: every rigorously defined mathematical theory can be exactly captured by a first-order logical theory; Frege's proof calculus is enough to describe the whole of mathematics, though not equivalent to it.

If proof theory and model theory have been the foundation of mathematical logic, they have been but two of the four pillars of the subject.[39] Set theory originated in the study of the infinite by Georg Cantor, and it has been the source of many of the most challenging and important issues in mathematical logic, from Cantor's theorem, through the status of the Axiom of Choice and the question of the independence of the continuum hypothesis, to the modern debate on large cardinal axioms.

Recursion theory captures the idea of computation in logical and arithmetic terms; its most classical achievements are the undecidability of the Entscheidungsproblem by Alan Turing, and his presentation of the Church–Turing thesis.[40] Today recursion theory is mostly concerned with the more refined problem of complexity classes—when is a problem efficiently solvable?—and the classification of degrees of unsolvability.[41]

Philosophical logic

Philosophical logic deals with formal descriptions of ordinary, non-specialist ("natural") language, restricted strictly to the arguments within philosophy's other branches. Most philosophers assume that the bulk of everyday reasoning can be captured in logic if a method or methods to translate ordinary language into that logic can be found. Philosophical logic is essentially a continuation of the traditional discipline called "logic" before the invention of mathematical logic. Philosophical logic has a much greater concern with the connection between natural language and logic. As a result, philosophical logicians have contributed a great deal to the development of non-standard logics (e.g. free logics, tense logics) as well as various extensions of classical logic (e.g. modal logics) and non-standard semantics for such logics (e.g. Kripke's supervaluationism in the semantics of logic).

Logic and the philosophy of language are closely related. Philosophy of language has to do with the study of how our language engages and interacts with our thinking. Logic has an immediate impact on other areas of study. Studying logic and the relationship between logic and ordinary speech can help a person better structure their own arguments and critique the arguments of others. Many popular arguments are filled with errors because so many people are untrained in logic and unaware of how to formulate an argument correctly.[42][43]

Computational logic

A simple toggling circuit is expressed using a logic gate and a synchronous register.

In the 1950s and 1960s, researchers predicted that when human knowledge could be expressed using logic with mathematical notation, it would be possible to create a machine that reasons, or artificial intelligence. This was more difficult than expected because of the complexity of human reasoning. In logic programming, a program consists of a set of axioms and rules. Logic programming systems such as Prolog compute the consequences of the axioms and rules in order to answer a query.
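To make "computing the consequences of the axioms and rules" concrete, here is a minimal sketch of forward chaining over ground Horn clauses. This is a deliberately simplified stand-in: Prolog itself answers queries by backward chaining with unification, which is not shown here, and the facts and rules are invented for the example.

```python
def forward_chain(facts, rules):
    """Repeatedly apply rules (body -> head) until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            # A rule fires when every atom in its body is already known.
            if head not in known and all(atom in known for atom in body):
                known.add(head)
                changed = True
    return known


# Hypothetical axioms (facts) and rules, written as plain strings.
facts = {"man(socrates)"}
rules = [
    (("man(socrates)",), "mortal(socrates)"),
    (("mortal(socrates)", "greek(socrates)"), "mortal_greek(socrates)"),
]

consequences = forward_chain(facts, rules)

# Answering a query amounts to checking membership in the derived set.
answer = "mortal(socrates)" in consequences
```

The second rule never fires because `greek(socrates)` is not among the facts, so the derived set contains only what the axioms and rules actually support.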

Today, logic is extensively applied in the fields of artificial intelligence and computer science, and these fields provide a rich source of problems in formal and informal logic. Argumentation theory is one good example of how logic is being applied to artificial intelligence. The ACM Computing Classification System in particular regards:

- Section F.3 on Logics and meanings of programs and F.4 on Mathematical logic and formal languages as part of the theory of computer science: this work covers formal semantics of programming languages, as well as work of formal methods such as Hoare logic;

- Boolean logic as fundamental to computer hardware: particularly, the system's section B.2 on Arithmetic and logic structures, relating to operatives AND, NOT, and OR;

- Many fundamental logical formalisms are essential to section I.2 on artificial intelligence, for example modal logic and default logic in Knowledge representation formalisms and methods, Horn clauses in logic programming, and description logic.
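The Boolean operations named in the list above, and the toggling circuit mentioned at the start of this section, can be imitated in software. The sketch below assumes the simplest possible toggle, a one-bit register whose next value is the NOT of its current value on every clock tick; the function names are illustrative and do not correspond to any hardware description language.

```python
def NOT(a: bool) -> bool:
    return not a


def AND(a: bool, b: bool) -> bool:
    return a and b


def OR(a: bool, b: bool) -> bool:
    return a or b


def toggle(state: bool, ticks: int) -> list:
    """Simulate a one-bit register fed by a NOT gate for a number of clock ticks."""
    history = []
    for _ in range(ticks):
        state = NOT(state)  # the register latches the inverted value on each tick
        history.append(state)
    return history


def XOR(a: bool, b: bool) -> bool:
    """Exclusive or, built only from AND, OR and NOT."""
    return OR(AND(a, NOT(b)), AND(NOT(a), b))


# Starting from False, the register alternates True, False, True, False.
trace = toggle(False, 4)
```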

Non-classical logic

The logics discussed above are all "bivalent" or "two-valued"; that is, they are most naturally understood as dividing propositions into true and false propositions. Non-classical logics are those systems that reject various rules of classical logic.

Hegel developed his own dialectic logic that extended Kant's transcendental logic but also brought it back to ground by assuring us that "neither in heaven nor in earth, neither in the world of mind nor of nature, is there anywhere such an abstract 'either–or' as the understanding maintains. Whatever exists is concrete, with difference and opposition in itself".[44]

In 1910, Nicolai A. Vasiliev extended the law of excluded middle and the law of contradiction and proposed the law of excluded fourth and logic tolerant to contradiction.[45] In the early 20th century Jan Łukasiewicz investigated the extension of the traditional true/false values to include a third value, "possible", so inventing ternary logic, the first multi-valued logic in the Western tradition.[46]
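A third truth value changes which classical laws survive. The sketch below uses the connectives commonly given for Łukasiewicz's three-valued logic, with the values 0, 1/2 and 1 standing for false, possible and true; the Python names are chosen for this example only.

```python
from fractions import Fraction

FALSE, POSSIBLE, TRUE = Fraction(0), Fraction(1, 2), Fraction(1)
VALUES = (FALSE, POSSIBLE, TRUE)


def neg(a):
    """Negation: swaps true and false, leaves 'possible' unchanged."""
    return 1 - a


def conj(a, b):
    """Conjunction: the smaller of the two values."""
    return min(a, b)


def disj(a, b):
    """Disjunction: the larger of the two values."""
    return max(a, b)


def impl(a, b):
    """Lukasiewicz implication: fully true whenever a is no truer than b."""
    return min(Fraction(1), 1 - a + b)


# 'a or not a' is no longer always true: it takes the value 1/2 when a is 'possible'.
excluded_middle = {a: disj(a, neg(a)) for a in VALUES}

# 'a implies a' remains fully true for every value.
identity = {a: impl(a, a) for a in VALUES}
```

Restricted to the values 0 and 1, these functions agree with the classical truth tables, so the third value extends rather than replaces the two-valued case.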

Logics such as fuzzy logic have since been devised with an infinite number of "degrees of truth", represented by a real number between 0 and 1.[47]

Intuitionistic logic was proposed by L.E.J. Brouwer as the correct logic for reasoning about mathematics, based upon his rejection of the law of the excluded middle as part of his intuitionism. Brouwer rejected formalization in mathematics, but his student Arend Heyting studied intuitionistic logic formally, as did Gerhard Gentzen. Intuitionistic logic is of great interest to computer scientists, as it is a constructive logic and sees many applications, such as extracting verified programs from proofs and influencing the design of programming languages through the formulae-as-types correspondence.
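The formulae-as-types correspondence can be glimpsed in a proof assistant, where a proposition is a type and a proof is a program of that type. The following Lean 4 sketch is an illustration only; the theorem names are made up. The first proof is literally the function that returns its first argument, and the second shows that the double negation of excluded middle is constructively provable even though excluded middle itself is not assumed.

```lean
-- A proof of A → (B → A) is a function that takes a proof of A,
-- ignores a proof of B, and returns the proof of A.
theorem const_intro (A B : Prop) : A → (B → A) :=
  fun a _ => a

-- Without assuming excluded middle, one can still prove ¬¬(A ∨ ¬A).
theorem not_not_em (A : Prop) : ¬¬(A ∨ ¬A) :=
  fun h => h (Or.inr (fun a => h (Or.inl a)))
```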

Modal logic is not truth conditional, and so it has often been proposed as a non-classical logic. However, modal logic is normally formalized with the principle of the excluded middle, and its relational semantics is bivalent, so this inclusion is disputable.

Controversies

"Is Logic Empirical?"

What is the epistemological status of the laws of logic? What sort of argument is appropriate for criticizing purported principles of logic? In an influential paper entitled "Is Logic Empirical?"[48] Hilary Putnam, building on a suggestion of W. V. Quine, argued that in general the facts of propositional logic have a similar epistemological status as facts about the physical universe, for example as the laws of mechanics or of general relativity, and in particular that what physicists have learned about quantum mechanics provides a compelling case for abandoning certain familiar principles of classical logic: if we want to be realists about the physical phenomena described by quantum theory, then we should abandon the principle of distributivity, substituting for classical logic the quantum logic proposed by Garrett Birkhoff and John von Neumann.[49]

Another paper of the same name by Michael Dummett argues that Putnam's desire for realism mandates the law of distributivity.[50] Distributivity of logic is essential for the realist's understanding of how propositions are true of the world in just the same way as he has argued the principle of bivalence is. In this way, the question, "Is Logic Empirical?" can be seen to lead naturally into the fundamental controversy in metaphysics on realism versus anti-realism.

Implication: Strict or material

The notion of implication formalized in classical logic does not comfortably translate into natural language by means of "if ... then ...", due to a number of problems called the paradoxes of material implication.

The first class of paradoxes involves counterfactuals, such as "If the moon is made of green cheese, then 2+2=5", which are puzzling because natural language does not support the principle of explosion. Eliminating this class of paradoxes was the reason for C. I. Lewis's formulation of strict implication, which eventually led to more radically revisionist logics such as relevance logic.

The second class of paradoxes involves redundant premises, falsely suggesting that we know the succedent because of the antecedent: thus "if that man gets elected, granny will die" is materially true since granny is mortal, regardless of the man's election prospects. Such sentences violate the Gricean maxim of relevance, and can be modelled by logics that reject the principle of monotonicity of entailment, such as relevance logic.

Tolerating the impossible

Hegel was deeply critical of any simplified notion of the law of non-contradiction. It was based on Gottfried Wilhelm Leibniz's idea that this law of logic also requires a sufficient ground to specify from what point of view (or time) one says that something cannot contradict itself. A building, for example, both moves and does not move; the ground for the first is our solar system and for the second the earth. In Hegelian dialectic, the law of non-contradiction, of identity, itself relies upon difference and so is not independently assertable.

Closely related to questions arising from the paradoxes of implication comes the suggestion that logic ought to tolerate inconsistency. Relevance logic and paraconsistent logic are the most important approaches here, though the concerns are different: a key consequence of classical logic and some of its rivals, such as intuitionistic logic, is that they respect the principle of explosion, which means that the logic collapses if it is capable of deriving a contradiction. Graham Priest, the main proponent of dialetheism, has argued for paraconsistency on the grounds that there are, in fact, true contradictions.[51]
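The principle of explosion is easy to exhibit for classical propositional logic: since no assignment of truth values satisfies both p and not-p, every conclusion follows vacuously from the pair. The sketch below shows only this classical behaviour, which is exactly what paraconsistent logics are designed to block; the helper name `entails` is an assumption of the example.

```python
from itertools import product


def entails(premises, conclusion, n_vars: int) -> bool:
    """Classical entailment: no valuation makes all premises true and the conclusion false."""
    return not any(
        all(prem(*vals) for prem in premises) and not conclusion(*vals)
        for vals in product([True, False], repeat=n_vars)
    )


# From the contradictory premises p and not-p, an unrelated q follows.
explosion = entails(
    [lambda p, q: p, lambda p, q: not p],
    lambda p, q: q,
    2,
)

# For contrast: from p alone, q does not follow.
control = entails([lambda p, q: p], lambda p, q: q, 2)
```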

Rejection of logical truth

The philosophical vein of various kinds of skepticism contains many kinds of doubt and rejection of the various bases on which logic rests, such as the idea of logical form, correct inference, or meaning, typically leading to the conclusion that there are no logical truths. This is in contrast with the usual views in philosophical skepticism, where logic directs skeptical enquiry to doubt received wisdoms, as in the work of Sextus Empiricus.

Friedrich Nietzsche provides a strong example of the rejection of the usual basis of logic: his radical rejection of idealization led him to reject truth as a "... mobile army of metaphors, metonyms, and anthropomorphisms—in short ... metaphors which are worn out and without sensuous power; coins which have lost their pictures and now matter only as metal, no longer as coins."[52] His rejection of truth did not lead him to reject the idea of either inference or logic completely, but rather suggested that "logic [came] into existence in man's head [out] of illogic, whose realm originally must have been immense. Innumerable beings who made inferences in a way different from ours perished".[53] Thus there is the idea that logical inference has a use as a tool for human survival, but that its existence does not support the existence of truth, nor does it have a reality beyond the instrumental: "Logic, too, also rests on assumptions that do not correspond to anything in the real world".[54]

Nietzsche's position, however, has come under extreme scrutiny for several reasons. Some philosophers, such as Jürgen Habermas, claim his position is self-refuting, and accuse Nietzsche of not even having a coherent perspective, let alone a theory of knowledge.[55] Georg Lukács, in his book The Destruction of Reason, asserts that, "Were we to study Nietzsche's statements in this area from a logico-philosophical angle, we would be confronted by a dizzy chaos of the most lurid assertions, arbitrary and violently incompatible."[56] Bertrand Russell, in his book A History of Western Philosophy, described Nietzsche's irrational claims by saying, "He is fond of expressing himself paradoxically and with a view to shocking conventional readers".[57]

Notes and references

- Russell, Bertrand (1945), A History of Western Philosophy And Its Connection with Political and Social Circumstances from the Earliest Times to the Present Day (PDF), Simon and Schuster, p. 762, archived from the original on 28 May 2014

Bibliography

- Barwise, J. (1982). Handbook of Mathematical Logic. Elsevier. ISBN 9780080933641.

- Belnap, N. (1977). "A useful four-valued logic". In Dunn & Eppstein, Modern uses of multiple-valued logic. Reidel: Boston.

- Bocheński, J. M. (1959). A précis of mathematical logic. Translated from the French and German editions by Otto Bird. D. Reidel, Dordrecht, South Holland.

- Bocheński, J. M. (1970). A history of formal logic. 2nd Edition. Translated and edited from the German edition by Ivo Thomas. Chelsea Publishing, New York.

- Brookshear, J. Glenn (1989). Theory of computation: formal languages, automata, and complexity. Redwood City, Calif.: Benjamin/Cummings Pub. Co. ISBN 0-8053-0143-7.

- Cohen, R.S, and Wartofsky, M.W. (1974). Logical and Epistemological Studies in Contemporary Physics. Boston Studies in the Philosophy of Science. D. Reidel Publishing Company: Dordrecht, Netherlands. ISBN 90-277-0377-9.

- Finkelstein, D. (1969). "Matter, Space, and Logic". in R.S. Cohen and M.W. Wartofsky (eds. 1974).

- Gabbay, D.M., and Guenthner, F. (eds., 2001–2005). Handbook of Philosophical Logic. 13 vols., 2nd edition. Kluwer Publishers: Dordrecht.

- Haack, Susan (1996). Deviant Logic, Fuzzy Logic: Beyond the Formalism, University of Chicago Press.

- Harper, Robert (2001). "Logic". Online Etymology Dictionary. Retrieved 8 May 2009.

- Hilbert, D., and Ackermann, W, (1928). Grundzüge der theoretischen Logik (Principles of Mathematical Logic). Springer-Verlag. OCLC 2085765

- Hodges, W. (2001). Logic. An introduction to Elementary Logic, Penguin Books.

- Hofweber, T. (2004), Logic and Ontology. Stanford Encyclopedia of Philosophy. Edward N. Zalta (ed.).

- Hughes, R.I.G. (1993, ed.). A Philosophical Companion to First-Order Logic. Hackett Publishing.

- Kline, Morris (1972). Mathematical Thought From Ancient to Modern Times. Oxford University Press. ISBN 0-19-506135-7.

- Kneale, William, and Kneale, Martha, (1962). The Development of Logic. Oxford University Press, London, UK.

- Liddell, Henry George; Scott, Robert. "Logikos". A Greek-English Lexicon. Perseus Project. Retrieved 8 May 2009.

- Mendelson, Elliott, (1964). Introduction to Mathematical Logic. Wadsworth & Brooks/Cole Advanced Books & Software: Monterey, Calif. OCLC 13580200

- Smith, B. (1989). "Logic and the Sachverhalt". The Monist 72(1): 52–69.

- Whitehead, Alfred North and Bertrand Russell (1910). Principia Mathematica. Cambridge University Press: Cambridge, England. OCLC 1041146

External links

- Logic at PhilPapers

- Logic at the Indiana Philosophy Ontology Project

- "Logic". Internet Encyclopedia of Philosophy.

- Hazewinkel, Michiel, ed. (2001) [1994], "Logical calculus", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4

- An Outline for Verbal Logic

- Introductions and tutorials

- An Introduction to Philosophical Logic, by Paul Newall, aimed at beginners.

- forall x: an introduction to formal logic, by P.D. Magnus, covers sentential and quantified logic.

- Logic Self-Taught: A Workbook (originally prepared for on-line logic instruction).

- Nicholas Rescher. (1964). Introduction to Logic, St. Martin's Press.

- https://philpapers.org/rec/BECLAM (Logic: A Modern Guide by Colin Beckley) An introduction to formal logic.

- Essays

- "Symbolic Logic" and "The Game of Logic", Lewis Carroll, 1896.

- Math & Logic: The history of formal mathematical, logical, linguistic and methodological ideas. In The Dictionary of the History of Ideas.

- Online Tools

- Interactive Syllogistic Machine A web based syllogistic machine for exploring fallacies, figures, terms, and modes of syllogisms.

- Reference material

- Translation Tips, by Peter Suber, for translating from English into logical notation.

- Ontology and History of Logic. An Introduction with an annotated bibliography.

- Reading lists

- The London Philosophy Study Guide offers many suggestions on what to read, depending on the student's familiarity with the subject:

- Magnani, L. Abduction, Reason, and Science: Processes of Discovery and Explanation. Kluwer Academic Plenum Publishers, New York, 2001. xvii. 205 pages. Hardcover, ISBN 0-306-46514-0.

- R. Josephson, J. & G. Josephson, S. Abductive Inference: Computation, Philosophy, Technology. Cambridge University Press, New York & Cambridge (U.K.). viii. 306 pages. Hardcover (1994), ISBN 0-521-43461-0, Paperback (1996), ISBN 0-521-57545-1.

- Bunt, H. & Black, W. Abduction, Belief and Context in Dialogue: Studies in Computational Pragmatics. (Natural Language Processing, 1.) John Benjamins, Amsterdam & Philadelphia, 2000. vi. 471 pages. Hardcover, ISBN 90-272-4983-0, ISBN 1556197942

- "On the Logic of drawing History from Ancient Documents especially from Testimonies" (1901), Collected Papers v. 7, paragraph 219.

- "PAP" ["Prolegomena to an Apology for Pragmatism"], MS 293 c. 1906, New Elements of Mathematics v. 4, pp. 319–320.

- A Letter to F. A. Woods (1913), Collected Papers v. 8, paragraphs 385–388.

This paper consists of three parts. The first part deals with Frege's distinction between sense and reference of proper names and a similar distinction in Navya-Nyaya logic. In the second part we have compared Frege's definition of number to the Navya-Nyaya definition of number. In the third part we have shown how the study of the so-called 'restrictive conditions for universals' in Navya-Nyaya logic anticipated some of the developments of modern set theory.

untrained subjects are prone to commit various sorts of fallacies and mistakes.

Origin of life

Stromatolites from Bolivia, from the Proterozoic (2.3 billion years ago). Vertical polished section.

Stromatolites growing in Yalgorup National Park in Australia

It is generally agreed that all life today evolved by common descent from a single primitive lifeform.[2] It is not known how this early form came about, but scientists think it was a natural process which took place perhaps 3,900 million years ago. This is in accord with the philosophy of naturalism: only natural causes are admitted.

It is not known whether metabolism or genetics came first. The main hypothesis which supports genetics first is the RNA world hypothesis, and the one which supports metabolism first is the protein world hypothesis.

Another big problem is how cells develop. All existing forms of life are built out of cells.[3]

Melvin Calvin, winner of the Nobel Prize in Chemistry, wrote a book on the subject,[4] and so did Alexander Oparin.[5] What links most of the early work on the origin of life is the idea that before life began there must have been a process of chemical change.[6] Another question which has been discussed by J.D. Bernal and others is the origin of the cell membrane. By concentrating the chemicals in one place, the cell membrane performs a vital function.[7]

Many religions teach that life did not evolve spontaneously, but was deliberately created by a god. Such theories are a part of creationism. Some "old earth" creationists believe in a slower creation that is generally more compatible with the known sciences of today. Other "young earth" creationists claim this happened within the last few thousand years, which is much more recent than the fossil record suggests. The lack of evidence for such views means that almost all scientists do not accept them.

Fossil record

[Timeline figure: axis ticks from −4500 to 0 in steps of 500.]

Earliest claimed life on Earth

The earliest claimed lifeforms are fossilized microorganisms (or microfossils). They were found in iron and silica-rich rocks which were once hydrothermal vents in the Nuvvuagittuq greenstone belt of Quebec, Canada. These rocks are as old as 4.28 billion years. The tubular forms they contain are shown in a report.[8] If this is the oldest record of life on Earth, it suggests "an almost instantaneous emergence of life" after oceans formed 4.4 billion years ago.[9][10][11] According to Stephen Blair Hedges, "If life arose relatively quickly on Earth… then it could be common in the universe".[12]

Previous earliest

A scientific study from 2002 showed that geological formations of stromatolites 3.45 billion years old contain fossilized cyanobacteria.[13][14] At the time it was widely agreed that stromatolites were the oldest known lifeform on Earth which had left a record of its existence. Therefore, if life originated on Earth, this happened sometime between 4.4 billion years ago, when water vapor first liquefied,[15] and 3.5 billion years ago. This is the background to the latest discovery discussed above.

The earliest evidence of life comes from the Isua supracrustal belt in Western Greenland and from similar formations in the nearby Akilia Islands. This is because a high level of the lighter isotope of carbon is found there. Living things take up lighter isotopes because this takes less energy. Carbon entering into rock formations has a δ13C of about −5.5, whereas biomass, because of its preferential uptake of 12C, has a δ13C of between −20 and −30. These isotopic fingerprints are preserved in the rocks. With this evidence, Mojzis suggested that life existed on the planet already by 3.85 billion years ago.[16]
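The text does not define δ13C, so the following is a sketch under an assumption: the usual convention, in which δ13C is the deviation of a sample's 13C/12C ratio from that of a reference standard, expressed in parts per thousand. The value used for the reference ratio is a commonly quoted figure for the VPDB standard and is an assumption here, as are the function names `delta13c` and `ratio_from_delta`.

```python
# Commonly quoted 13C/12C ratio of the VPDB reference standard.
# Assumed value for illustration; it is not given in the text.
R_VPDB = 0.0112372


def delta13c(r_sample: float, r_standard: float = R_VPDB) -> float:
    """delta13C in parts per thousand: (R_sample / R_standard - 1) * 1000."""
    return (r_sample / r_standard - 1.0) * 1000.0


def ratio_from_delta(delta: float, r_standard: float = R_VPDB) -> float:
    """Invert the definition: the 13C/12C ratio for a given delta13C."""
    return r_standard * (1.0 + delta / 1000.0)


# The delta13C values quoted in the text, converted back to isotope ratios.
cases = [
    ("carbon entering rock formations", -5.5),
    ("biomass, upper bound", -20.0),
    ("biomass, lower bound", -30.0),
]
for label, delta in cases:
    ratio = ratio_from_delta(delta)
    print(f"{label}: delta13C = {delta}, 13C/12C = {ratio:.7f}")
```

A more negative δ13C means proportionally less 13C, that is, enrichment in the lighter isotope 12C, which is why values of −20 to −30 in ancient rock are read as a possible signature of biological carbon.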

A few scientists think life might have been carried from planet to planet by the transport of spores. This idea, now known as panspermia, was first put forward by Arrhenius.[17]

History of studies into the origin of life

Alexander Oparin (right) at the laboratory

Spontaneous generation

Until the early 19th century many people believed that life regularly arose from non-living matter. This was called spontaneous generation, and was disproved by Louis Pasteur. He showed that without spores no bacteria or viruses grew on sterile material.

Darwin

In a letter to Joseph Dalton Hooker on 11 February 1871,[18] Charles Darwin proposed a natural process for the origin of life. He suggested that the original spark of life may have begun in a "warm little pond, with all sorts of ammonia and phosphoric salts, light, heat, electricity, etc. A protein compound was then chemically formed ready to undergo still more complex changes". He went on to explain that "at the present day such matter would be instantly devoured or absorbed, which would not have been the case before living creatures were formed".[19]

Haldane and Oparin