People love to say that they're busy. They're busy on the phone or on their PC or, to put it more simply, people are busy on everything. The subject at hand is the direct correlation to the computer. The computer is based on the brain. When the computer is busy, you must wait by simply putting it down, and you are aware that you can call the carrier, i.e. GOOGLE, and find out if it's shut down or just rebooting, yet GOOGLE increased its RAM.

The brain is not getting enough connection because it is going too slow. Fast Food back in the day was a good way to get some energy; however, the rally from Americans at large made Fast Food an enemy and said it's not good for you, yet all know that it gets us home when we're tired. Please understand that food is not enough to feed the brain anymore, and people need to stop entertaining autonomous vehicles as a fix. Should you not be able to drive, then you should not be behind the wheel, period.

What Americans don't understand about this simple analogy is ridiculous. The computer is able to explain it all simply, as you are losing your five senses:

Five senses refers to the five traditionally recognized methods of perception, or sense: taste, sight, touch, smell, and sound. Five senses or The Five Senses may

"Humans have five basic senses: touch, sight, hearing, smell and taste. The sensing organs associated with each sense send information to the brain to help us understand and perceive the world around us." (Oct 23, 2017, as found on GOOGLE)

GOOGLE search bar: "Five senses refers to the five traditionally recognized methods of perception, or sense: taste, sight, touch, smell, and sound. Five senses or The Fiv"

About 5 results on GOOGLE: https://www.google.com/search?q=Five+senses+refers+to+the+five+traditionally+recognized+methods+of+perception%2C+or+sense%3A+taste%2C+sight%2C+touch%2C+smell%2C+and+sound.+Five+senses+or+The+Fiv&ie=utf-8&oe=utf-8&client=firefox-b-1

Sight, Sound, Smell, Taste, and Touch: How the Human Body Receives Sensory Information. A diagram of the five senses ... (hearing), smell (olfaction), taste (

A sense is a physiological capacity of organisms that provides data for perception

Oct 23, 2017 - Humans have five basic senses: touch, sight, hearing, smell and taste. The sensing organs associated with each sense send information to the ...

We traditionally refer to the five senses of sight, hearing, taste, smell, and touch—

These senses are touch, taste, smell, sight and sound. Learn about the ... The ways we understand and perceive the world around us as humans are known as senses. We have five traditional senses known as taste, smell, touch, hearing and sight. ... The sense of smell, or olfaction, is closely related to the sense of taste.

Random Access Memory (or simply RAM) is the memory or information storage in a computer that is used to store running programs and data for the programs.

In computing, memory refers to the computer hardware integrated circuits that store information for immediate use in a computer; it is synonymous with the term "primary storage". Computer memory operates at a high speed, for example random-access ...

Random-access memory (RAM /ræm/) is a form of computer data storage that stores data and machine code currently being used. A random-access memory device allows data items to be read or written in almost the same amount of time irrespective of the physical location of data inside the memory. In contrast, with other direct-access data storage media such as hard disks, CD-RWs, DVD-RWs and the older magnetic tapes and drum memory, the time required to read and write data items varies significantly depending on their physical locations on the recording medium, due to mechanical limitations such as media rotation speeds and arm movement.

Early computers used relays, mechanical counters[3] or delay lines for main memory functions. Ultrasonic delay lines could only reproduce data in the order it was written. Drum memory could be expanded at relatively low cost but efficient retrieval of memory items required knowledge of the physical layout of the drum to optimize speed. Latches built out of vacuum tube triodes, and later, out of discrete transistors, were used for smaller and faster memories such as registers. Such registers were relatively large and too costly to use for large amounts of data; generally only a few dozen or few hundred bits of such memory could be provided.

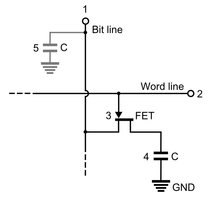

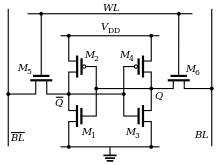

In addition to serving as temporary storage and working space for the operating system and applications, RAM is used in numerous other ways.

Gallagher, Sean. "Memory that never forgets: non-volatile DIMMs hit the market". Ars Technica. Archived from the original on 2017-07-08.

Bellis, Mary. "The Invention of the Intel 1103".

"IBM Archives -- FAQ's for Products and Services". ibm.com. Archived from the original on 2012-10-23.

Napper, Brian, Computer 50: The University of Manchester Celebrates the Birth of the Modern Computer, archived from the original on 4 May 2012, retrieved 26 May 2012

Williams, F.C.; Kilburn, T. (Sep 1948), "Electronic Digital Computers", Nature, 162 (4117): 487, doi:10.1038/162487a0. Reprinted in The Origins of Digital Computers

Williams, F.C.; Kilburn, T.; Tootill, G.C. (Feb 1951), "Universal High-Speed Digital Computers: A Small-Scale Experimental Machine", Proc. IEE, 98 (61): 13–28, doi:10.1049/pi-2.1951.0004, archived from the original on 2013-11-17.

Toscal BC-1411 calculator Archived 2017-07-29 at the Wayback Machine., Science Museum, London

Toshiba "Toscal" BC-1411 Desktop Calculator Archived 2007-05-20 at the Wayback Machine.

"Shadow Ram". Archived from the original on 2006-10-29. Retrieved 2007-07-24.

The Emergence of Practical MRAM "Archived copy" (PDF). Archived from the original (PDF) on 2011-04-27. Retrieved 2009-07-20.

"Tower invests in Crocus, tips MRAM foundry deal". EETimes. Archived from the original on 2012-01-19.

"EcoRAM held up as less power-hungry option than DRAM for server farms" by Heather Clancy, 2008. Archived 2008-06-30 at the Wayback Machine.

The term was coined in "Archived copy" (PDF). Archived (PDF) from the original on 2012-04-06. Retrieved 2011-12-14..

"Platform 2015: Intel® Processor and Platform Evolution for the Next Decade" (PDF). March 2, 2005. Archived (PDF) from the original on April 27, 2011.

Agarwal, Vikas; Hrishikesh, M. S.; Keckler, Stephen W.; Burger, Doug (June 10–14, 2000). "Clock Rate versus IPC: The End of the Road for Conventional Microarchitectures" (PDF). Proceedings of the 27th Annual International Symposium on Computer Architecture. 27th Annual International Symposium on Computer Architecture. Vancouver, BC. Retrieved 14 July 2018.

Rainer Waser (2012). Nanoelectronics and Information Technology. John Wiley & Sons. p. 790. Archived from the original on August 1, 2016. Retrieved March 31, 2014.

Chris Jesshope and Colin Egan (2006). Advances in Computer Systems Architecture: 11th Asia-Pacific Conference, ACSAC 2006, Shanghai, China, September 6-8, 2006, Proceedings. Springer. p. 109. Archived from the original on August 1, 2016. Retrieved March 31, 2014.

Ahmed Amine Jerraya and Wayne Wolf (2005). Multiprocessor Systems-on-chips. Morgan Kaufmann. pp. 90–91. Archived from the original on August 1, 2016. Retrieved March 31, 2014.

Impact of Advances in Computing and Communications Technologies on Chemical Science and Technology. National Academy Press. 1999. p. 110. Archived from the original on August 1, 2016. Retrieved March 31, 2014.

Celso C. Ribeiro and Simone L. Martins (2004). Experimental and Efficient Algorithms: Third International Workshop, WEA 2004, Angra Dos Reis, Brazil, May 25-28, 2004, Proceedings, Volume 3. Springer. p. 529. Archived from the original on August 1, 2016. Retrieved March 31, 2014.

Random-access memory

From Wikipedia, the free encyclopedia

Example of writable volatile random-access memory: Synchronous Dynamic RAM modules, primarily used as main memory in personal computers, workstations, and servers.

RAM contains multiplexing and demultiplexing circuitry, to connect the data lines to the addressed storage for reading or writing the entry. Usually more than one bit of storage is accessed by the same address, and RAM devices often have multiple data lines and are said to be "8-bit" or "16-bit", etc. devices.

In today's technology, random-access memory takes the form of integrated circuits. RAM is normally associated with volatile types of memory (such as DRAM modules), where stored information is lost if power is removed, although non-volatile RAM has also been developed.[1] Other types of non-volatile memories exist that allow random access for read operations, but either do not allow write operations or have other kinds of limitations on them. These include most types of ROM and a type of flash memory called NOR-Flash.

Integrated-circuit RAM chips came into the market in the early 1970s, with the first commercially available DRAM chip, the Intel 1103, introduced in October 1970.[2]

History

These IBM tabulating machines from the 1930s used mechanical counters to store information

A portion of a core memory with a modern flash SD card on top

1 Megabit chip – one of the last models developed by VEB Carl Zeiss Jena in 1989

The first practical form of random-access memory was the Williams tube starting in 1947. It stored data as electrically charged spots on the face of a cathode ray tube. Since the electron beam of the CRT could read and write the spots on the tube in any order, memory was random access. The capacity of the Williams tube was a few hundred to around a thousand bits, but it was much smaller, faster, and more power-efficient than using individual vacuum tube latches. Developed at the University of Manchester in England, the Williams tube provided the medium on which the first electronically stored program was implemented in the Manchester Baby computer, which first successfully ran a program on 21 June 1948.[4] In fact, rather than the Williams tube memory being designed for the Baby, the Baby was a testbed to demonstrate the reliability of the memory.[5][6]

Magnetic-core memory was invented in 1947 and developed up until the mid-1970s. It became a widespread form of random-access memory, relying on an array of magnetized rings. By changing the sense of each ring's magnetization, data could be stored with one bit stored per ring. Since every ring had a combination of address wires to select and read or write it, access to any memory location in any sequence was possible.

Magnetic core memory was the standard form of memory system until displaced by solid-state memory in integrated circuits, starting in the early 1970s. Dynamic random-access memory (DRAM) allowed replacement of a 4 or 6-transistor latch circuit by a single transistor for each memory bit, greatly increasing memory density at the cost of volatility. Data was stored in the tiny capacitance of each transistor, and had to be periodically refreshed every few milliseconds before the charge could leak away. The Toshiba Toscal BC-1411 electronic calculator, which was introduced in 1965,[7][8] used a form of DRAM built from discrete components.[8] DRAM was then developed by Robert H. Dennard in 1968.

Prior to the development of integrated read-only memory (ROM) circuits, permanent (or read-only) random-access memory was often constructed using diode matrices driven by address decoders, or specially wound core rope memory planes.[citation needed]

Types of random-access memory

The two widely used forms of modern RAM are static RAM (SRAM) and dynamic RAM (DRAM). In SRAM, a bit of data is stored using the state of a six transistor memory cell. This form of RAM is more expensive to produce, but is generally faster and requires less dynamic power than DRAM. In modern computers, SRAM is often used as cache memory for the CPU. DRAM stores a bit of data using a transistor and capacitor pair, which together comprise a DRAM cell. The capacitor holds a high or low charge (1 or 0, respectively), and the transistor acts as a switch that lets the control circuitry on the chip read the capacitor's state of charge or change it. As this form of memory is less expensive to produce than static RAM, it is the predominant form of computer memory used in modern computers.

Both static and dynamic RAM are considered volatile, as their state is lost or reset when power is removed from the system. By contrast, read-only memory (ROM) stores data by permanently enabling or disabling selected transistors, such that the memory cannot be altered. Writeable variants of ROM (such as EEPROM and flash memory) share properties of both ROM and RAM, enabling data to persist without power and to be updated without requiring special equipment. These persistent forms of semiconductor ROM include USB flash drives, memory cards for cameras and portable devices, and solid-state drives. ECC memory (which can be either SRAM or DRAM) includes special circuitry to detect and/or correct random faults (memory errors) in the stored data, using parity bits or error correction codes.
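The parity-bit idea mentioned above can be sketched in a few lines. This is only a minimal illustration of even parity over a single byte, not a description of how any particular ECC module works; the function names `add_parity` and `check_parity` are made up for the example.

```python
def add_parity(byte: int) -> int:
    """Return a 9-bit word: the 8 data bits plus an even-parity bit on top."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected an 8-bit value")
    parity = bin(byte).count("1") % 2  # 1 if the data has an odd number of 1 bits
    return (parity << 8) | byte


def check_parity(word: int) -> bool:
    """True if the 9-bit word still has an even number of 1 bits."""
    return bin(word).count("1") % 2 == 0


stored = add_parity(0b10110010)   # four 1 bits, so the parity bit is 0
corrupted = stored ^ (1 << 3)     # flip one bit to imitate a memory error
print(check_parity(stored), check_parity(corrupted))
```

A single parity bit can only detect an odd number of flipped bits; it cannot say which bit flipped, which is why error correction codes add more check bits.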

In general, the term RAM refers solely to solid-state memory devices (either DRAM or SRAM), and more specifically the main memory in most computers. In optical storage, the term DVD-RAM is somewhat of a misnomer since, unlike CD-RW or DVD-RW it does not need to be erased before reuse. Nevertheless, a DVD-RAM behaves much like a hard disc drive if somewhat slower.

Memory cell

Main article: Memory cell (computing)

The memory cell is the fundamental building block of computer memory. The memory cell is an electronic circuit that stores one bit of binary information and it must be set to store a logic 1 (high voltage level) and reset to store a logic 0 (low voltage level). Its value is maintained/stored until it is changed by the set/reset process. The value in the memory cell can be accessed by reading it.

In SRAM, the memory cell is a type of flip-flop circuit, usually implemented using FETs. This means that SRAM requires very low power when not being accessed, but it is expensive and has low storage density.

A second type, DRAM, is based around a capacitor. Charging and discharging this capacitor can store a "1" or a "0" in the cell. However, the charge in this capacitor slowly leaks away, and must be refreshed periodically. Because of this refresh process, DRAM uses more power, but it can achieve greater storage densities and lower unit costs compared to SRAM.
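The leak-and-refresh behaviour described above can be imitated with a toy model. The leak rate and read threshold below are arbitrary assumptions chosen to make the effect visible, and the names `DramCell` and `survives` are hypothetical; real DRAM timing is set by the device, not by numbers like these.

```python
from typing import Optional


class DramCell:
    """Toy model of one DRAM cell: a capacitor whose charge leaks over time."""

    THRESHOLD = 0.5  # assumed: charge above this reads as 1
    LEAK = 0.8       # assumed: fraction of charge left after each time step

    def __init__(self) -> None:
        self.charge = 0.0

    def write(self, bit: int) -> None:
        self.charge = 1.0 if bit else 0.0

    def read(self) -> int:
        return 1 if self.charge > self.THRESHOLD else 0

    def tick(self) -> None:
        """Let one time step pass; some charge leaks away."""
        self.charge *= self.LEAK

    def refresh(self) -> None:
        """Read the bit and write it back at full strength."""
        self.write(self.read())


def survives(steps: int, refresh_every: Optional[int]) -> int:
    """Store a 1, let time pass, and return what the cell reads afterwards."""
    cell = DramCell()
    cell.write(1)
    for step in range(1, steps + 1):
        cell.tick()
        if refresh_every and step % refresh_every == 0:
            cell.refresh()
    return cell.read()


print(survives(10, refresh_every=None))  # never refreshed
print(survives(10, refresh_every=2))     # refreshed every 2 steps
```

In this model the stored 1 is lost without refresh and kept with frequent refresh. A refresh that comes too late (for example every 4 steps here) also loses the bit, because the charge has already dropped below the threshold when it is read back.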

SRAM Cell (6 Transistors)

DRAM Cell (1 Transistor and one capacitor)

Addressing

To be useful, memory cells must be readable and writeable. Within the RAM device, multiplexing and demultiplexing circuitry is used to select memory cells. Typically, a RAM device has a set of address lines A0... An, and for each combination of bits that may be applied to these lines, a set of memory cells is activated. Due to this addressing, RAM devices virtually always have a memory capacity that is a power of two.

Usually several memory cells share the same address. For example, a 4-bit 'wide' RAM chip has 4 memory cells for each address. Often the width of the memory and that of the microprocessor are different; for a 32-bit microprocessor, eight 4-bit RAM chips would be needed.

Often more addresses are needed than can be provided by a device. In that case, external multiplexors to the device are used to activate the correct device that is being accessed.
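The arithmetic behind this section can be written out as a short sketch. The helper names (`capacity_words`, `chips_for_bus`, `decode`) are invented for illustration, and `decode` assumes the simplest possible arrangement, where the upper address bits pick the device and the lower bits go to the device's own address lines.

```python
from typing import Tuple


def capacity_words(address_lines: int) -> int:
    """Number of distinct addresses selectable with the given address lines."""
    return 2 ** address_lines


def chips_for_bus(bus_width_bits: int, chip_width_bits: int) -> int:
    """How many chips must sit side by side to fill the data bus."""
    if bus_width_bits % chip_width_bits:
        raise ValueError("bus width should be a multiple of chip width")
    return bus_width_bits // chip_width_bits


def decode(address: int, lines_per_device: int) -> Tuple[int, int]:
    """Split a system address into (device index, address inside the device)."""
    device = address >> lines_per_device               # upper bits select the device
    offset = address & ((1 << lines_per_device) - 1)   # lower bits go to the device
    return device, offset


print(capacity_words(10))      # 10 address lines
print(chips_for_bus(32, 4))    # 32-bit processor, 4-bit wide chips
print(decode(0x0C05, 10))      # which device, and where inside it
```

With 10 address lines a device has 1024 addresses, a 32-bit bus needs eight 4-bit chips, and address 0x0C05 lands at offset 5 of device 3 under the assumed layout.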

Memory hierarchy

Main article: Memory hierarchy

One can read and over-write data in RAM. Many computer systems have a memory hierarchy consisting of processor registers, on-die SRAM caches, external caches, DRAM, paging systems and virtual memory or swap space on a hard drive. This entire pool of memory may be referred to as "RAM" by many developers, even though the various subsystems can have very different access times, violating the original concept behind the random access term in RAM. Even within a hierarchy level such as DRAM, the specific row, column, bank, rank, channel, or interleave organization of the components make the access time variable, although not to the extent that access time to rotating storage media or a tape is variable. The overall goal of using a memory hierarchy is to obtain the highest possible average access performance while minimizing the total cost of the entire memory system (generally, the memory hierarchy follows the access time with the fast CPU registers at the top and the slow hard drive at the bottom).
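The "average access performance" goal can be made concrete with a small calculation. The hit rates and latencies below are invented round numbers, not measurements, and `average_access_time` is a hypothetical helper; the point is only to show how a slow level at the bottom dominates the average unless the upper levels catch almost every request.

```python
import math


def average_access_time(levels):
    """Expected time per request for a hierarchy listed fastest first.

    levels: list of (name, hit_rate, latency_ns). hit_rate is the chance that
    a request reaching this level is served there; the last level should use 1.0.
    """
    total = 0.0
    reach = 1.0  # fraction of requests that get as far as the current level
    for _name, hit_rate, latency_ns in levels:
        total += reach * latency_ns  # every request reaching a level pays its latency
        reach *= 1.0 - hit_rate      # the misses continue to the next level
    return total


# Assumed, illustrative figures only.
hierarchy = [
    ("cache", 0.90, 1.0),
    ("DRAM", 0.99, 100.0),
    ("hard drive", 1.00, 10_000_000.0),
]
print(f"{average_access_time(hierarchy):.0f} ns on average")
```

Even though only one request in a thousand reaches the hard drive in this example, its latency accounts for nearly all of the average.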

In many modern personal computers, the RAM comes in an easily upgraded form of modules called memory modules or DRAM modules about the size of a few sticks of chewing gum. These can quickly be replaced should they become damaged or when changing needs demand more storage capacity. As suggested above, smaller amounts of RAM (mostly SRAM) are also integrated in the CPU and other ICs on the motherboard, as well as in hard-drives, CD-ROMs, and several other parts of the computer system.

Other uses of RAM

A stick of laptop RAM, roughly half the size of desktop RAM.

Virtual memory

Main article: Virtual memory

Most modern operating systems employ a method of extending RAM capacity, known as "virtual memory". A portion of the computer's hard drive is set aside for a paging file or a scratch partition, and the combination of physical RAM and the paging file form the system's total memory. (For example, if a computer has 2 GB of RAM and a 1 GB page file, the operating system has 3 GB total memory available to it.) When the system runs low on physical memory, it can "swap" portions of RAM to the paging file to make room for new data, as well as to read previously swapped information back into RAM. Excessive use of this mechanism results in thrashing and generally hampers overall system performance, mainly because hard drives are far slower than RAM.
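Thrashing can be demonstrated with a tiny paging simulation. This sketch assumes least-recently-used replacement, which is one possible policy and not necessarily what any given operating system uses; `count_page_faults` is a made-up name.

```python
from collections import OrderedDict


def count_page_faults(accesses, frames):
    """Count how often a page must be fetched from the paging file.

    accesses: sequence of page numbers the program touches, in order.
    frames:   how many pages fit in physical RAM at once.
    """
    resident = OrderedDict()  # pages currently in RAM, least recently used first
    faults = 0
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)        # mark as most recently used
        else:
            faults += 1                       # page is not in RAM: go to disk
            if len(resident) >= frames:
                resident.popitem(last=False)  # evict the least recently used page
            resident[page] = True
    return faults


pattern = [0, 1, 2, 3] * 5  # a program cycling through four pages
print(count_page_faults(pattern, frames=4))
print(count_page_faults(pattern, frames=3))
```

With four frames the program faults only on its first pass. With three frames every single access faults, because the page needed next is always the one that was just evicted.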

RAM disk

Main article: RAM drive

Software can "partition" a portion of a computer's RAM, allowing it to act as a much faster hard drive that is called a RAM disk. A RAM disk loses the stored data when the computer is shut down, unless memory is arranged to have a standby battery source.

Shadow RAM

Sometimes, the contents of a relatively slow ROM chip are copied to read/write memory to allow for shorter access times. The ROM chip is then disabled while the initialized memory locations are switched in on the same block of addresses (often write-protected). This process, sometimes called shadowing, is fairly common in both computers and embedded systems.

As a common example, the BIOS in typical personal computers often has an option called “use shadow BIOS” or similar. When enabled, functions that rely on data from the BIOS’s ROM instead use DRAM locations (most can also toggle shadowing of video card ROM or other ROM sections). Depending on the system, this may not result in increased performance, and may cause incompatibilities. For example, some hardware may be inaccessible to the operating system if shadow RAM is used. On some systems the benefit may be hypothetical because the BIOS is not used after booting in favor of direct hardware access. Free memory is reduced by the size of the shadowed ROMs.[9]

Recent developments

Several new types of non-volatile RAM, which preserve data while powered down, are under development. The technologies used include carbon nanotubes and approaches utilizing tunnel magnetoresistance. Amongst the 1st generation MRAM, a 128 KiB (128 × 2^10 bytes) chip was manufactured with 0.18 µm technology in the summer of 2003.[citation needed] In June 2004, Infineon Technologies unveiled a 16 MiB (16 × 2^20 bytes) prototype again based on 0.18 µm technology. There are two 2nd generation techniques currently in development: thermal-assisted switching (TAS)[10] which is being developed by Crocus Technology, and spin-transfer torque (STT) on which Crocus, Hynix, IBM, and several other companies are working.[11] Nantero built a functioning carbon nanotube memory prototype 10 GiB (10 × 2^30 bytes) array in 2004. Whether some of these technologies can eventually take significant market share from either DRAM, SRAM, or flash-memory technology, however, remains to be seen.

Since 2006, "solid-state drives" (based on flash memory) with capacities exceeding 256 gigabytes and performance far exceeding traditional disks have become available. This development has started to blur the definition between traditional random-access memory and "disks", dramatically reducing the difference in performance.

Some kinds of random-access memory, such as "EcoRAM", are specifically designed for server farms, where low power consumption is more important than speed.[12]

Memory wall

The "memory wall" is the growing disparity of speed between CPU and memory outside the CPU chip. An important reason for this disparity is the limited communication bandwidth beyond chip boundaries, which is also referred to as bandwidth wall. From 1986 to 2000, CPU speed improved at an annual rate of 55% while memory speed only improved at 10%. Given these trends, it was expected that memory latency would become an overwhelming bottleneck in computer performance.[13]

CPU speed improvements slowed significantly partly due to major physical barriers and partly because current CPU designs have already hit the memory wall in some sense. Intel summarized these causes in a 2005 document.[14]

"First of all, as chip geometries shrink and clock frequencies rise, the transistor leakage current increases, leading to excess power consumption and heat... Secondly, the advantages of higher clock speeds are in part negated by memory latency, since memory access times have not been able to keep pace with increasing clock frequencies. Third, for certain applications, traditional serial architectures are becoming less efficient as processors get faster (due to the so-called Von Neumann bottleneck), further undercutting any gains that frequency increases might otherwise buy. In addition, partly due to limitations in the means of producing inductance within solid state devices, resistance-capacitance (RC) delays in signal transmission are growing as feature sizes shrink, imposing an additional bottleneck that frequency increases don't address."

The RC delays in signal transmission were also noted in "Clock Rate versus IPC: The End of the Road for Conventional Microarchitectures"[15] which projected a maximum of 12.5% average annual CPU performance improvement between 2000 and 2014.
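The growth figures quoted for the memory wall (55% per year for CPUs against 10% per year for memory, from 1986 to 2000) compound quickly. The following sketch works out the resulting gap, under the simplifying assumption that each rate held constant and compounded every year over the whole period; `compound` is a hypothetical helper.

```python
def compound(rate: float, years: int) -> float:
    """Total improvement factor after compounding an annual rate."""
    return (1.0 + rate) ** years


years = 2000 - 1986
cpu = compound(0.55, years)  # CPU speed growth, per the figures in the text
mem = compound(0.10, years)  # memory speed growth, per the figures in the text
print(f"CPU: x{cpu:.0f}, memory: x{mem:.1f}, gap: x{cpu / mem:.0f}")
```

Under that assumption the CPU ends up several hundred times faster while memory improves less than fourfold, so the gap between them grows to more than a hundredfold.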

A different concept is the processor-memory performance gap, which can be addressed by 3D integrated circuits that reduce the distance between the logic and memory aspects that are further apart in a 2D chip.[16] Memory subsystem design requires a focus on the gap, which is widening over time.[17] The main method of bridging the gap is the use of caches: small amounts of high-speed memory that house recent operations and instructions near the processor, speeding up the execution of those operations or instructions in cases where they are called upon frequently. Multiple levels of caching have been developed to deal with the widening gap, and the performance of high-speed modern computers relies on evolving caching techniques.[18] These can prevent the loss of processor performance, as it takes less time to perform the computation it has been initiated to complete.[19] There can be up to a 53% difference between the growth in processor speed and the lagging speed of main memory access.[20]
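Why caches help "in cases where they are called upon frequently" can be shown with a very small simulation. This sketch models a direct-mapped cache, which is just one simple cache organisation chosen for brevity; the line count and line size are arbitrary assumptions and `hit_ratio` is a made-up name.

```python
def hit_ratio(addresses, num_lines=8, line_size=16):
    """Simulate a direct-mapped cache and return the fraction of accesses that hit."""
    lines = [None] * num_lines  # which memory block each cache line currently holds
    hits = 0
    for addr in addresses:
        block = addr // line_size  # memory block containing this address
        index = block % num_lines  # the one cache line this block may occupy
        if lines[index] == block:
            hits += 1
        else:
            lines[index] = block   # miss: fetch the block from main memory
    return hits / len(addresses)


loop = list(range(0, 64)) * 10               # re-reading the same 64 bytes
scattered = list(range(0, 64 * 1024, 1024))  # every access touches a new block
print(hit_ratio(loop), hit_ratio(scattered))
```

The loop misses only on its first touch of each block and hits afterwards, while the scattered pattern never reuses anything and gains nothing from the cache.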

In contrast, RAM can be as fast as 5766 MB/s vs 477 MB/s for an SSD.[21]

References

- Pinola, Melanie. "Add a RAM Disk to Your Computer for Faster-than-SSD Performance". Lifehacker. Archived from the original on 10 September 2017. Retrieved 10 September 2017.

External links

Media related to RAM at Wikimedia Commons

- This page was last edited on 2 August 2018, at 05:26 (UTC).

- Text is available under the Creative Commons Attribution-ShareAlike License; additional terms may apply. By using this site, you agree to the Terms of Use and Privacy Policy. Wikipedia® is a registered trademark of the Wikimedia Foundation, Inc., a non-profit organization.
